Registration and Welcome

Overview

Teaching: min

Exercises: minQuestions

Objectives

Registration

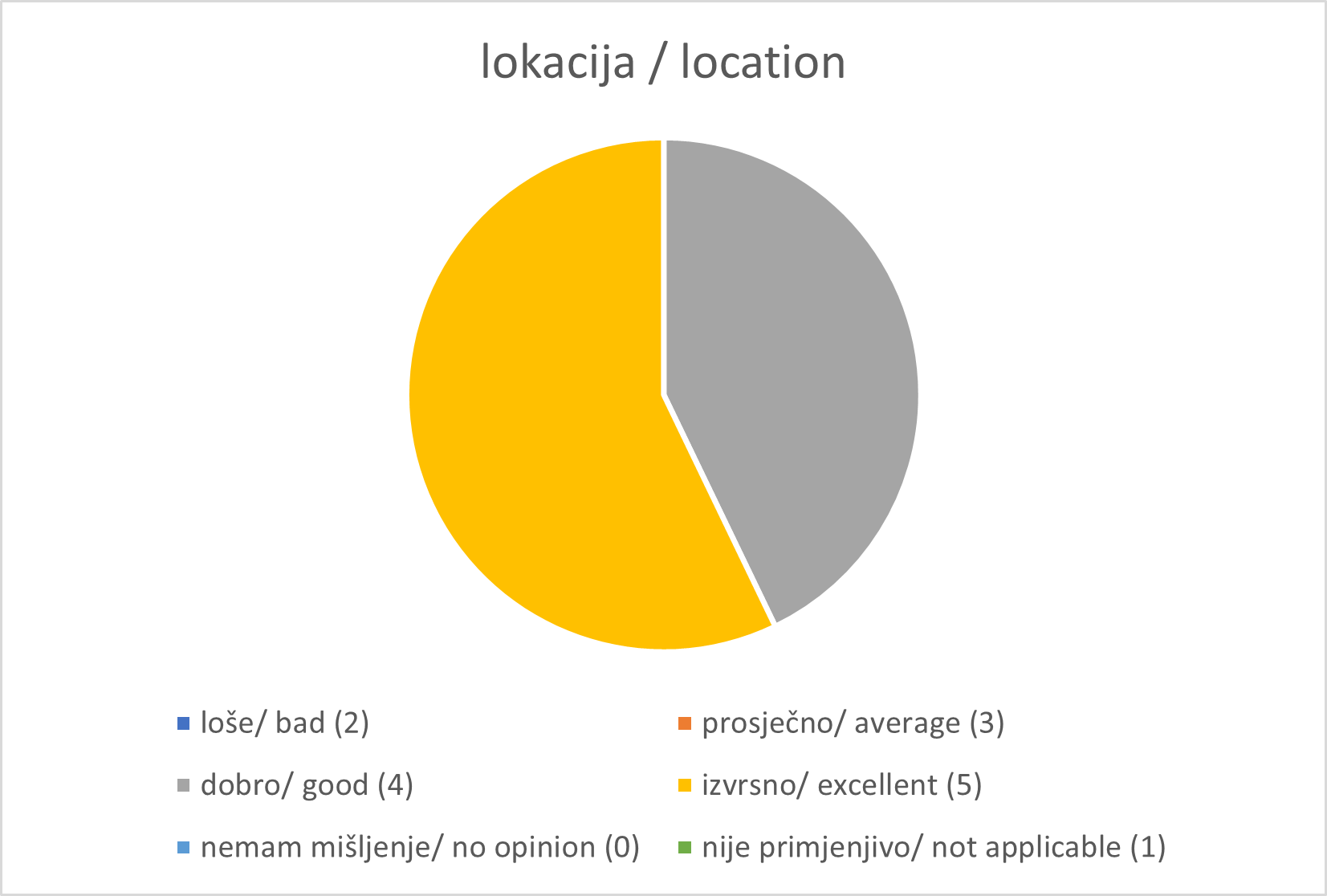

You can find the registration desk at the 3rd floor of ZG Tower Annex, Big conference hall (Velika dvorana))

Welcome

Welcome by Gabrijela Šestani

Načelnica Sektora za prirodu / Head of the Nature sector

Zavod za zaštitu okoliša i prirode / Institute for Environment and Nature

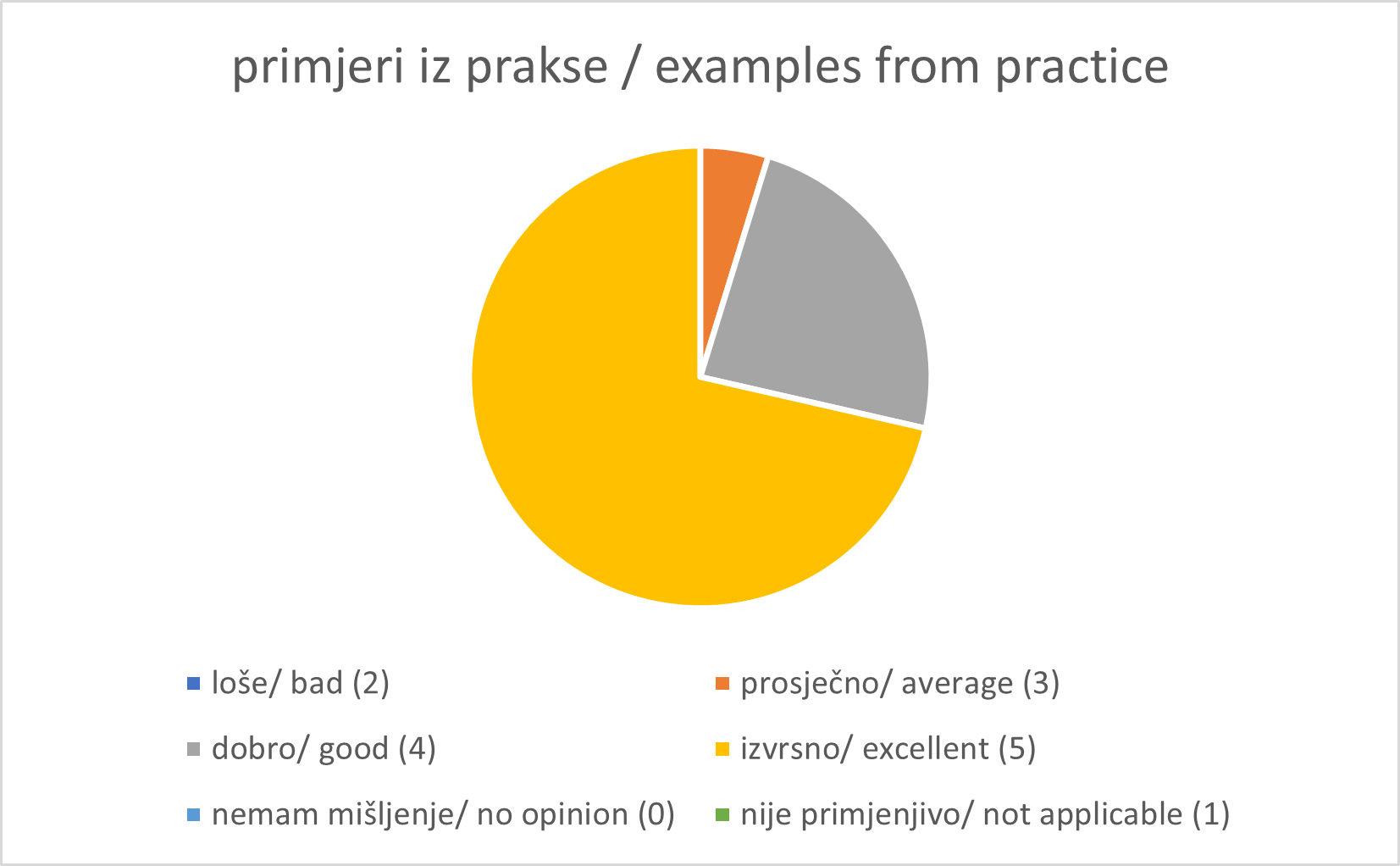

Pre-workshop survey

Key Points

Introduction to GBIF

Overview

Teaching: 45 min

Exercises: 30 minQuestions

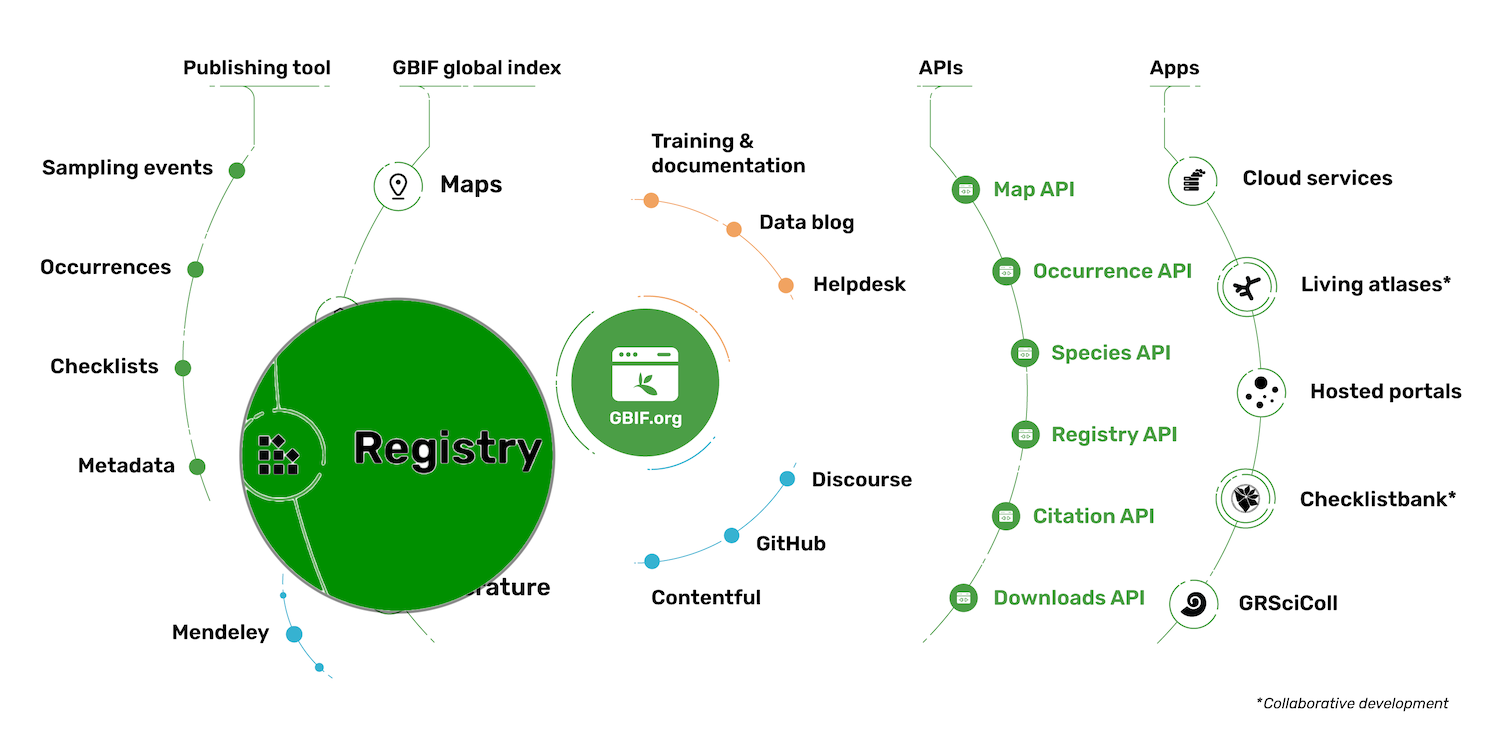

What is GBIF?

How is GBIF organized

The GBIF portal

Objectives

Understand how GBIF works.

Understand how GBIF is organized.

Basic understanding of the GBIF portal

GBIF—the Global Biodiversity Information Facility—is an international network and data infrastructure funded by the world’s governments and aimed at providing anyone, anywhere, open access to data about all types of life on Earth.

About

The Global Biodiversity Information Facility (GBIF) is an international network of country and organizational Participants that exists to enable free and open access to biodiversity data from all sources and to support biodiversity science, environmental research, and evidence based decision-making. GBIF operates as a federated system of distributed data publishing efforts, coordinated through a global informatics infrastructure and collaborative network. In this module, you will learn more about GBIF.

GBIF introduction movie

In this video (03:23) you will learn about GBIF through animation. It is a co-production of SiB Colombia and GBIF Spain, both GBIF national nodes, with co-funding from GBIF Spain and Instituto de Investigación de Recursos Biológicos Alexander von Humboldt (IAvH). The video is in Spanish, but subtitles are available.

What is GBIF video

In this video (07:55), Tim Hirsch, Deputy Director of the GBIF Secretariat, provides you with an overview of GBIF.

GBIF Overview

GBIF updates a set of overview slides with key information on a quarterly basis. The deck provides an overview of the network status, data use, access and publication, and the latest news and announcements.

Presentation

Exercises 1: Investigate how your country/organization is represented in GBIF

The GBIF website has pages dedicated to countries/economies, including for countries/economies that do not yet participate in GBIF. You can use the search box on the homepage to look up a country/organization.

- The tabs on these pages provide a general description of:

- data available about the biodiversity of the country

- data published by your country/organization,

- the participation of the country/organization

- other relevant information on the use of data by researchers in the country/organization.

- Look up your country on the GBIF website.

- Is a list of introduced and invasive alien species available for your country?

- How well does the information available on GBIF reflect what you know about the biodiversity of your country?

- Do you see publications from/about your country?

- Download the Croatia Activity report

Solution

- Find Croatia on this map

- [Global Register of Introduced and Invasive Species- Croatia] (https://www.gbif.org/dataset/f82a4f7f-6f84-4b58-82e6-6b41ec9a1f49)

- Note your idea about this

- publications

- activity report

Exercises 2: Downloading data from GBIF

The GBIF website has pages dedicated to data retrieval and downloading.

- Find and download this occurrence only dataset from GBIF: “Tipuloidea of Martinique - 2016-2018”

- Find and download this sampling event dataset from GBIF: “Meetnetten.be - Chorus counts for Amphibia in Flanders, Belgium’

- Find and investigate this checklist on GBIF: https://www.gbif.org/dataset/fd004d9a-2ea4-4244-bb60-0df508d20a15

How many times is this dataset cited?

How many occurrences are in this dataset?

Are all the occurrences georeferenced?

What is the licence on the dataset?

Which sampling method was used to collect the specimen?

Is there any media vailable about this dataset?

How should you cite this dataset?

What is the DOI of this dataset?

Solution

url

5

282

all georeferenced Check issues and flags

282 occurrences where ‘Licence’ equals: CC0 1.0

During the 2018 expedition, the main collecting methods were coloured pan traps and sweep nets. In 2016-2017, also Malaise traps were in operation.

Mederos J, Pollet M, Oosterbroek P, Brosens D (2023). Tipuloidea of Martinique - 2016-2018. Version 1.10. Research Institute for Nature and Forest (INBO). Occurrence dataset https://doi.org/10.15468/s8h9pg accessed via GBIF.org on 2024-02-12.

https://doi.org/10.15468/s8h9pgurl

33

710

all georeferenced Check issues and flags

710 occurrences where ‘Licence’ equals: CC0 1.0

url Piesschaert F, Speybroeck J, Brosens D, Westra T, Desmet P, Ledegen H, Van de Poel S, Pollet M (2023). Meetnetten.be - Chorus counts for Amphibia in Flanders, Belgium. Version 1.17. Research Institute for Nature and Forest (INBO). Sampling event dataset https://doi.org/10.15468/d4bu8j accessed via GBIF.org on 2024-02-12. https://doi.org/10.15468/d4bu8jurl

0

0 This is a checklist

nothing georeferenced, but the target area of this checklists is Flanders, Belgium

*CC0 1.0

No

Branquart E, Adriaens T, Desmet P, Devisscher S, D’hondt B, Denys L, Dumortier A, Latli A, Oldoni D, Packet J, Scheers K, Vanderhoeven S, Vermeersch X, Willeput R (2023). RIPARIAS target species list. Version 1.47. Research Institute for Nature and Forest (INBO). Checklist dataset https://doi.org/10.15468/p4ugqr accessed via GBIF.org on 2024-02-12. https://doi.org/10.15468/p4ugqr

Key Points

The GBIF portal is the place to find biodiversity data

Break

Overview

Teaching: min

Exercises: minQuestions

Objectives

Key Points

The GBIF nodes, governance and network

Overview

Teaching: 45 min

Exercises: 0 minQuestions

How is GBIF organized

Objectives

Understanding the GBIF governance and bodies

1: GBIF participation

Exercise 1: Investigate Croatia participation in GBIF

- Find out information on Croatia on GBIF.org

- In which regional network is Croatia?

- Explore Croatia Country page

Solution

- Europe and Central Asia

2: The Nodes

A node is a team designated by a Participant to coordinate a network of people and institutions that produce, manage and use biodiversity data, collectively building an infrastructure for delivering biodiversity information.

Nodes meets every year either globally or regionlly.

Exercise 2: Seek information on the Croatian Node

- When did Croatia joined the GBIF network?

- Who is the contact person for the Croatian Node?

- How much data publishers from Croatia?

Solution

- 2022

- No contact details

- Two data publishers

3: GBIF Governance

Exercise 3: Governing Board and Standing Committee

- Which country did host the two last Governing Bards?

- Who is the Chair of the Science Committee?

Solution

- Australia in 2023 & Belgium in 2022

- Birgit Gemeinholzer is Science Committee Chair

Key Points

The GBIF Participants

The GBIF Nodes network

The GBIF Governance

Lunch Break

Overview

Teaching: min

Exercises: minQuestions

Objectives

Key Points

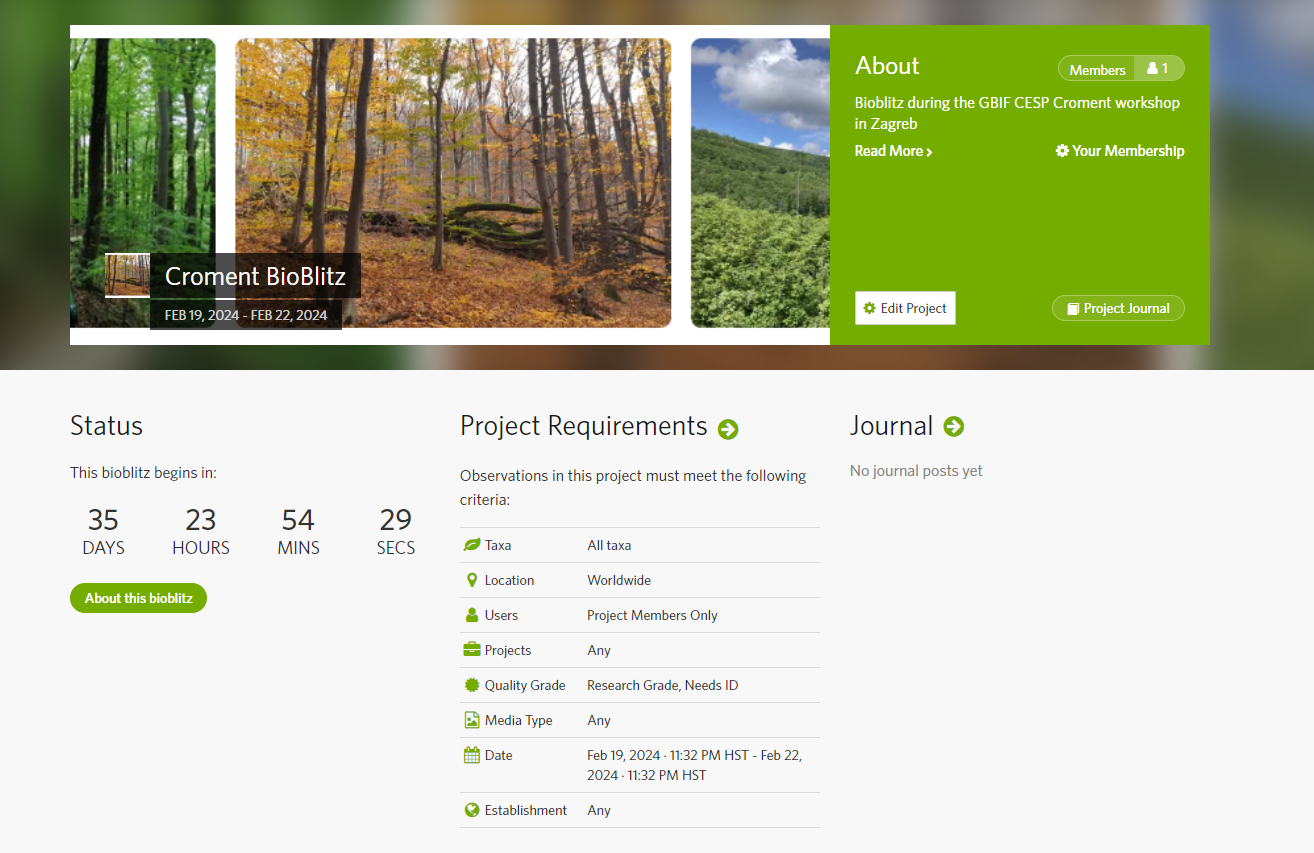

Croment BioBlitz

Overview

Teaching: 10 min

Exercises: 5 minQuestions

As a citizen scientist, how to share your observations with GBIF.

Objectives

Initiate Croment Bioblitz.

Bioblitz

Action : Croment Bioblitz

The INaturalist website is the place where you will start your Bioblitz

- Go to inaturalist.org and register

- Go to the tab project and search for Croment

- Click on Croment Bioblitz and join

- Check this tutorial video

- How many observations from Croatia are already in INaturalist?

- And how many of them are on GBIF?

TASK

- Go out in nature

- Add observations to INaturalist

- Check the competition

SOLUTION

- Check INaturalist

- Check GBIF

Key Points

Citizen Science & Biodiversity Data

What data is already available from iNaturalist

Belgian Node

Overview

Teaching: 30 min

Exercises: minQuestions

How is the Belgian node organized?

Belgium in the world of Biodiversity data

Objectives

History of the Belgian Node

How the Belgian node is organized.

Presentation Belgium in the world of Biodiversity data

Excercise:

- How many data publishers are active in Belgium?

- How many datasets do contain records about Belgium?

- How many datasets are published by Belgium?

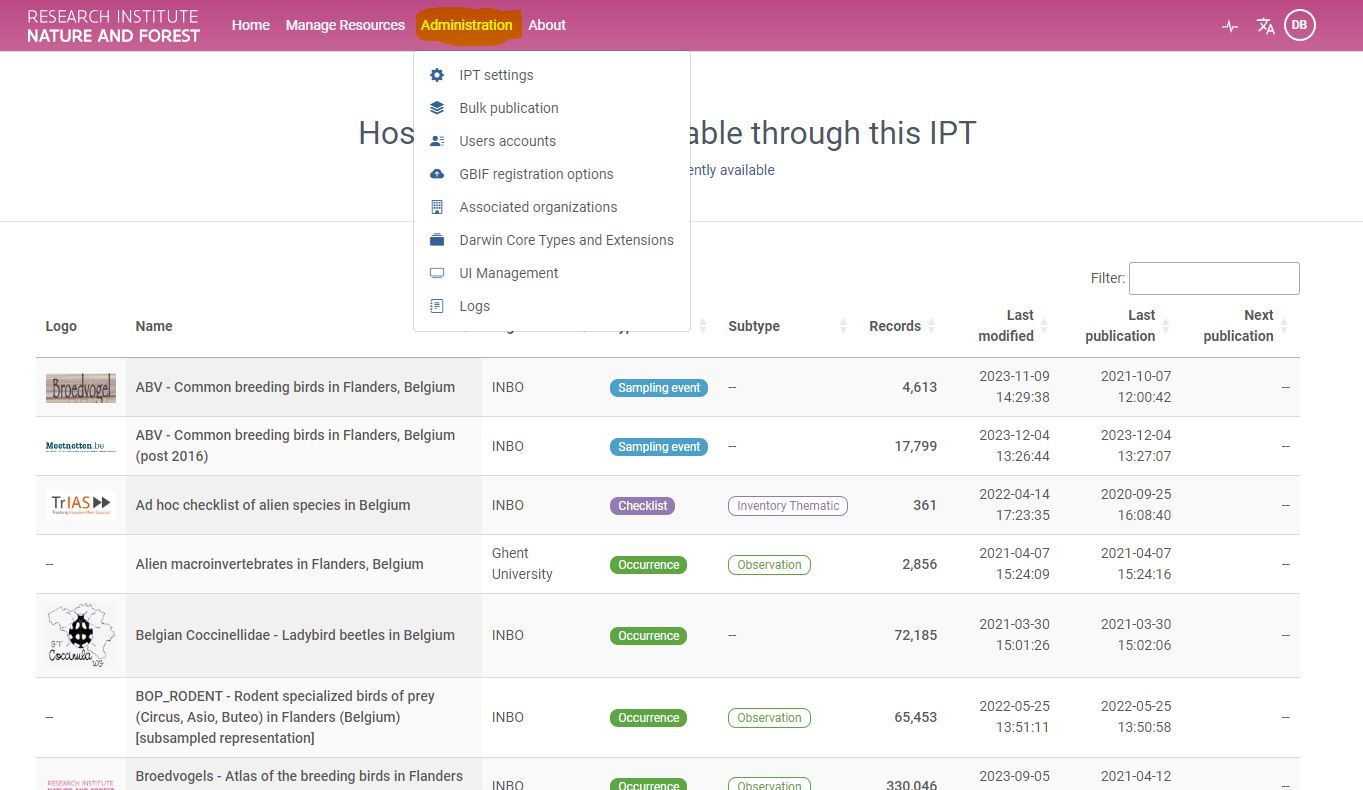

- Navigate to the INBO (Research Institute for Nature and Forest) IPT and check how many publishers are making use of this IPT instance

- Navigate to www.biodiversity.be and find GBIF info

SOLUTION

- 27

- 1274

- 536

- 8 (Check here)

Presentation : Engaging research communities for data mobilization and use: The GBIF node in Belgium

Key Points

The GBIF portal is the place to find biodiversity data

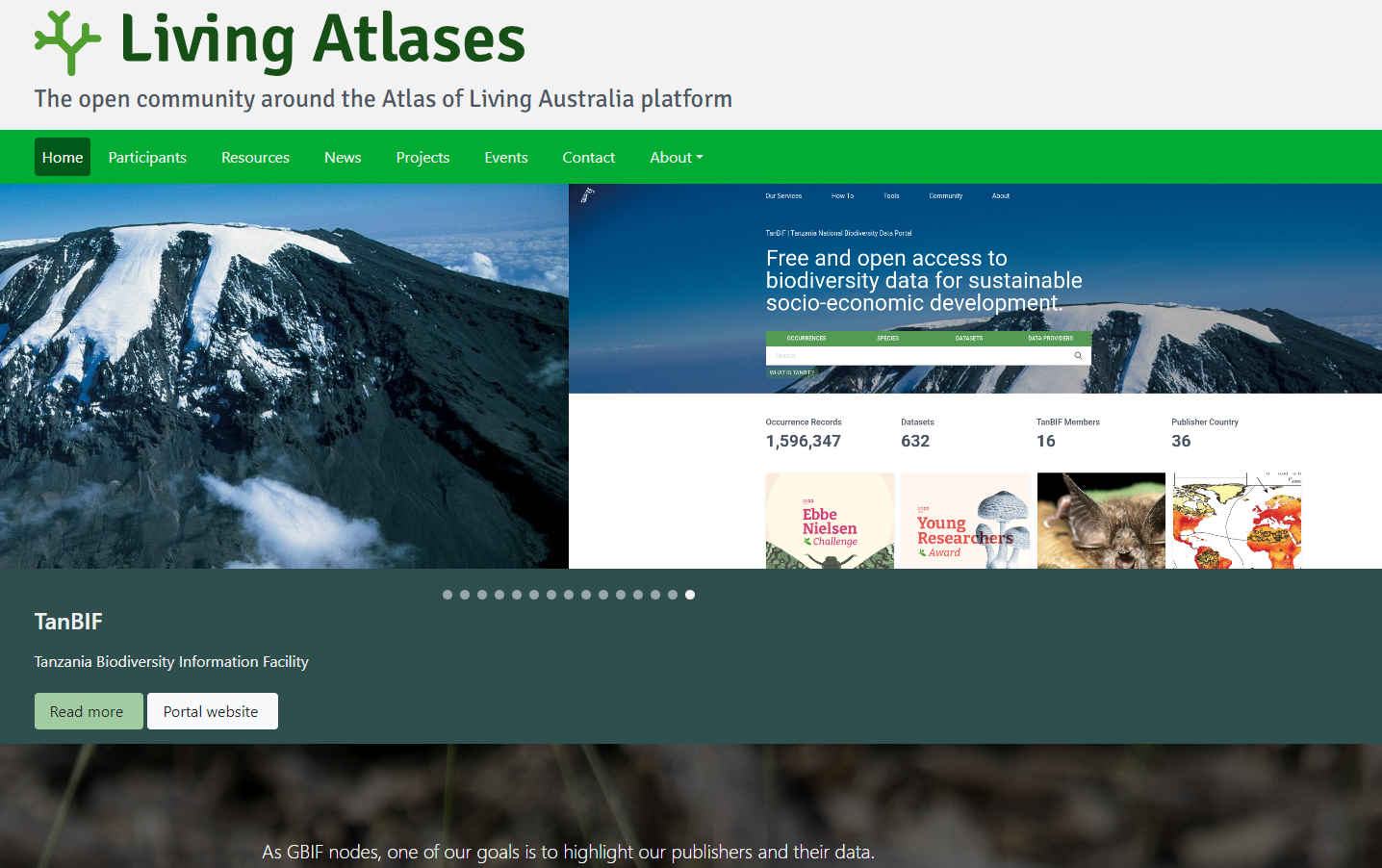

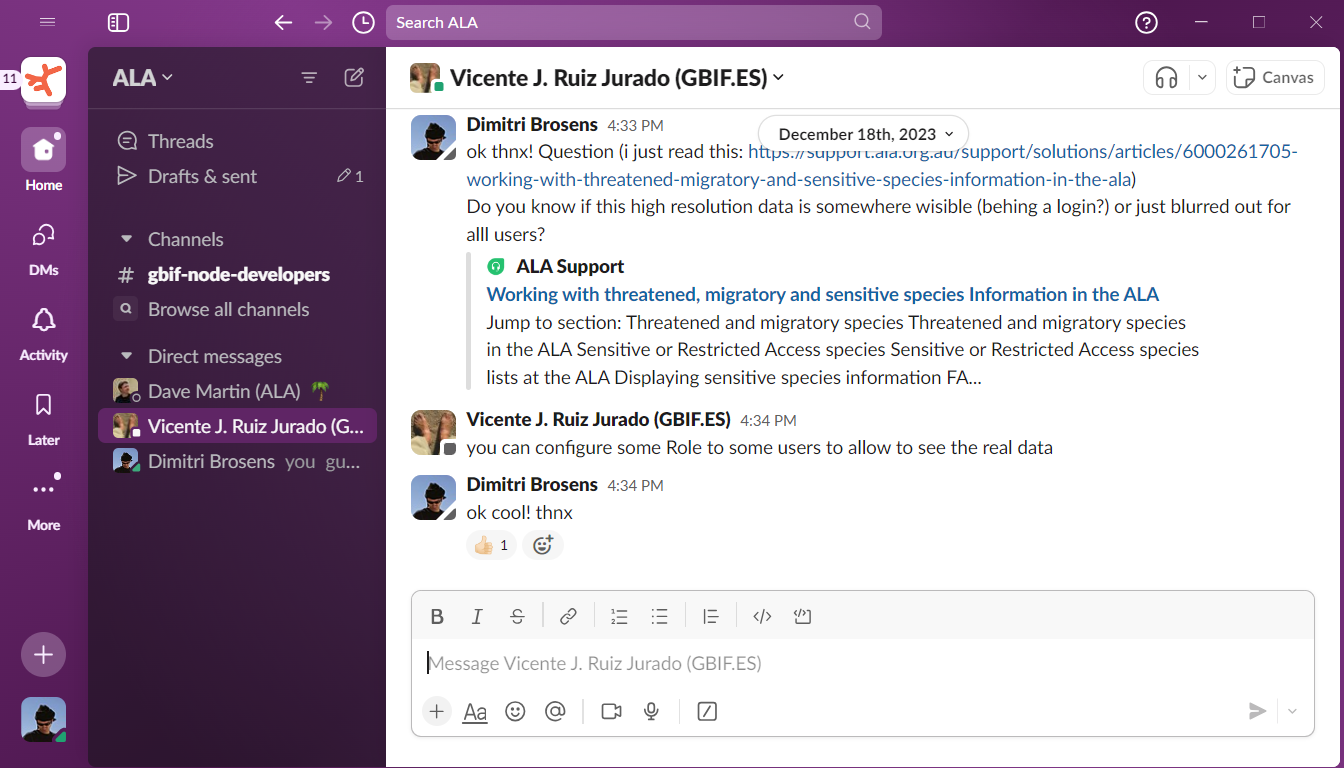

Living Atlasses & Croatian GBIF node

Overview

Teaching: 60 min

Exercises: 0 minQuestions

Introduction to Living Atlas Croatia

Objectives

Introduction to Living Atlas Croatia & Croatinan GBIF node

Introduction to Living Atlas Flanders (Belgium)

Presentation Living Atlas Croatia

Presentation Living Atlas Flanders (Belgium)

Key Points

Main concepts of a living Atlas project

Break

Overview

Teaching: min

Exercises: minQuestions

Objectives

Key Points

GBIF Policy & science

Overview

Teaching: 45 min

Exercises: 15 minQuestions

GBIF Science & Policy

Objectives

Understand how GBIF interacts with Science.

Understand how GBIF interacts with Policy.

1: GBIF & Science

Exercise 1 : GBIF and Science

- How many citations for the Meditera3 dataset published by the University of Zagreb Faculty of Science?

- This dataset have been reused in one thesis. Which one?

- In the Science Review topics, which ones are of interest for you?

Solution

- 11 citations

- Decreases over time in shannon diversity of land snails by Hmming J.

- The covered topics of the Science Review are : AGRICULTURE, BIODIVERSITY SCIENCE , BIOGEOGRAPHY , CITIZEN SCIENCE , CLIMATE CHANGE , CONSERVATION, DATA MANAGEMENT, DNA , ECOLOGY, ECOSYSTEM SERVICES, EVOLUTION, FRESHWATER, HUMAN HEALTH, MARINE, PHYLOGENETICS, SPECIES DISTRIBUTION, TAXONOMY & INVASIVES.

2: GBIF & CBD

Exercise 2 : GBIF and CBD

- Does GBIF plays an official role in the CBD? Which role?

- Are GBIF data relevant to GBF targets?

- Which Ad-Hoc Technical Expert Group includes GBIF staff?

Solution

- Yes, GBIF is observer as Inter-Governmental Organization

- Yes, definitely

- AHTEG on Indicators

3: Delivering relevant data

Exercise 3 : Delivering relevant data

- Is GBIF data reuse by Science?

- Is GBIF data reuse by Policy?

Solution

- Yes! see Science Review

- Yes! eg CBD, IPBES and Impact Assessments

4: Science Policy Interface

Exercise 4 : Science Policy Interface

- Does GBIF supports national biodiversity commitments?

- Does GBIF supports Science Policy Interface?

- Is this done by the nodes and/or the Secretariat?

Solution

- Yes

- Yes

- Both

Key Points

GBIF data reuse by scientists

GBIF Science review

GBIF interaction with CBD

Delivering relevant data

Science Policy Interface

GBIF Discussion

Overview

Teaching: 0 min

Exercises: 60 minQuestions

Objectives

Group discussion

Discussion

What challenges are next in relation to:

- Data Quality: Discuss the challenges and strategies for ensuring the quality of biodiversity data in GBIF. How can we address issues such as incomplete or inaccurate data?

Quality is important, and data should be verified (AI could help in some parts, but in the end you need well trained AI. Specialists are still very needed. Especially for difficult species. Imprtant shoul be the versioning of the verification of data . Spatial visualisation is important, and in the contract shoud be notad that data publication (quality data should be published)

- Data Access and Use: Explore the various ways researchers, policymakers, and the public can access and utilize the data available through GBIF. How can we maximize the impact of this data for conservation and research?

Maximizing the use of data. There is the need to improve the data coverage (geographically and also by environmental conditions.) Gap analysis…. Environmental variables. Support and collaborate with the for example Biodiversa+ and maximase the use of the data.

- Data Sharing and Collaboration: Discuss the importance of collaboration among institutions and countries in sharing biodiversity data through GBIF. How can we encourage more participation and data sharing?

Many advantages in sharing data to GBIF. Your work is visible for science and policy. New collaborations. It avoids (in some cases) repetition of collection. BEtter planning of data collection and avoid mistakes that were done in the past. Data what you share are already prepared and standardized…. Collaboration- A node is a good thing. More participation: more visibility to datasets and make them more usable. (small grants for institutions for data mobilization & digitizing)

- Technological Advances: Consider how technological advancements such as machine learning, remote sensing, and DNA sequencing are shaping the future of biodiversity data collection and analysis within GBIF.

We need to make more integration between BOLD and GBIF. Good thing they they are working on that!!

- Data Privacy and Security: Address concerns around data privacy and security within GBIF, particularly regarding sensitive species or locations. How can we balance open access with the need to protect sensitive information?**

Already addressed today and before. Privacy concers (is GDPR an issue , or maybe not :) ). Name and surname should be provided. Locations for sensitive species can be blurred, **species blurring is not a good thing!!!

-

Capacity Building: Explore opportunities for capacity building initiatives to empower researchers, particularly in developing countries, to contribute to and utilize biodiversity data through GBIF.

Recognize this recognition for GBIF in the citation. Use of bibliographicCitation in DwC should be promoted. In a call, an obligation for sharing data could be mantioned. If you publish in scientific papers before sharing with GBIF, you shoul make sure the connection is made More Workshops

-

Monitoring and Assessment: Discuss how biodiversity data from GBIF can be used for monitoring changes in biodiversity over time and assessing the effectiveness of conservation efforts.

-

Future Directions: Consider the future directions of GBIF and the role it can play in addressing global challenges such as climate change, habitat loss, and species extinction.

Discussion challenge

Choose a topic to discuss in your group. 30 minutes for group discussion. 30 minutes for reporting back to the room. 5-6 persons per group.

Solution

Report back to the room on your group discussion

Key Points

How GBIF works

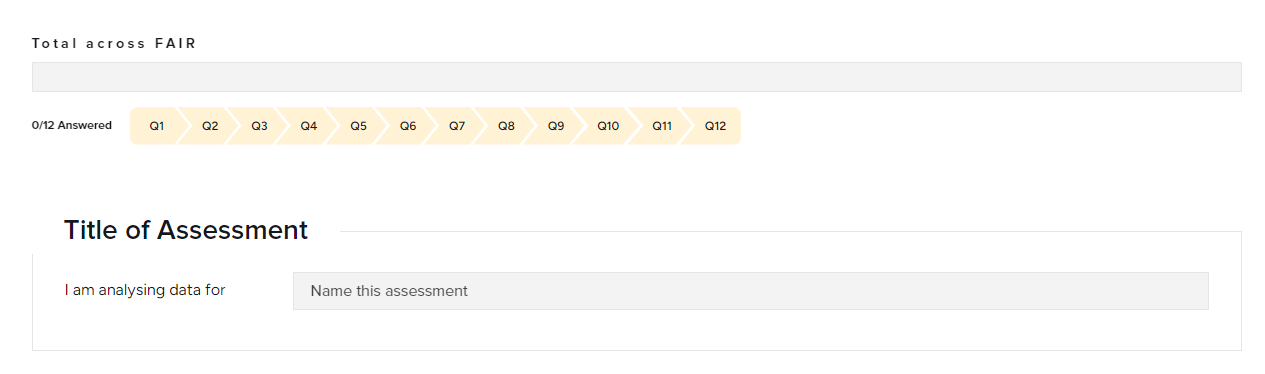

FAIR and Open Science

Overview

Teaching: 45 min

Exercises: 0 minQuestions

What is open science

A new research life cycle?

FAIR data?

Objectives

Undersctand the principles of Open Science

Understand how FAIR data works.

Understand how FAIR data is organized.

Presentation

Excercise : FAIR data & Open Science

- What is the difference between FAIR and OPEN data?

- Check the FAIR Self assessment tool here Think about a dataset you know and run over the assessment

- What could you do to make your data more FAIR?

- Is data published through GBIF FAIR?

- Is all data published by GBIF considered as open data?

SOLUTION

- FAIR data is not always open, FAIR data is findable and good documented. Open data per definition is not always FAIR. (Just an Excel somewhere on a website is considered as open data)

- Publish your data in GBIF or in another open repository like Zenodo

- YES

- No, CC-BY-NC is not considered as open data

Presentation

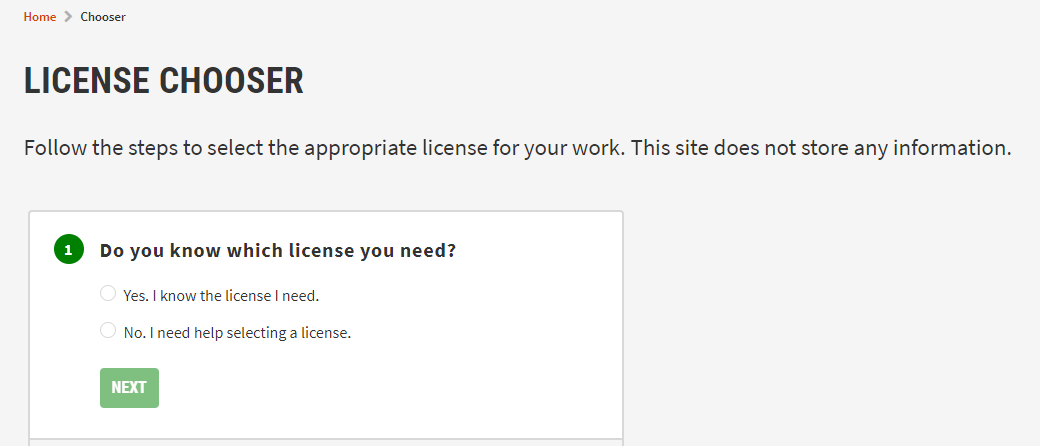

Excercise : Creative commons license chooser

- Check the Creative commons license chooser

- Learn how to find an appropriate license for your biodiversity data

- Is this license alowed for GBIF?

- Is CC-BY-NC an open data license?

SOLUTION

- Check the license chooser

- The only licenses allowed for GBIF are CC0 ; CC_BY ; and CC_BY_NC

- CC-BY-NC is not considered as an open data license

Key Points

Open Science & FAIR data

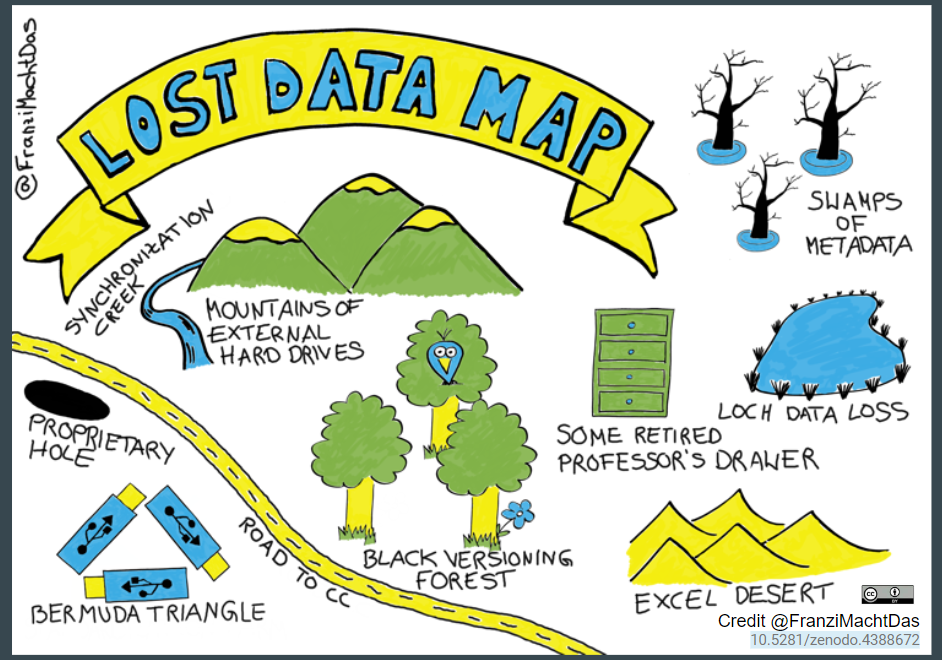

Data management & best practices

Overview

Teaching: 30 min

Exercises: 15 minQuestions

How to deal with data?

Tips & tricks for good data management

What is Biodiversity informatics?

What is a Biodiversity dataset?

Objectives

Introduction to data management plan

Introduction to Biodiversity informatics

Feel familiar with open data types

Distinction between data quality and fitness for use

Make sure your data are tidy data

Learn some best practices

Presentation

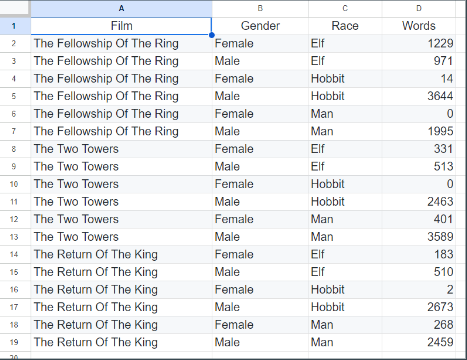

Exercise

Challenge: Make this data tidy.

- Download this SAMPLE_DATE

- Open in spreadsheet programme (Excel, LibreOffice, Openoffice,….)

- Make this data Tidy (Each variable forms a column and contains values, Each observation forms a row, Each type of observational unit forms a table) Open this link for the complete excercise and tips

Solution

Key Points

Best data management practices

Organize your Data and Metadata

Coffee Break

Overview

Teaching: min

Exercises: minQuestions

Objectives

Key Points

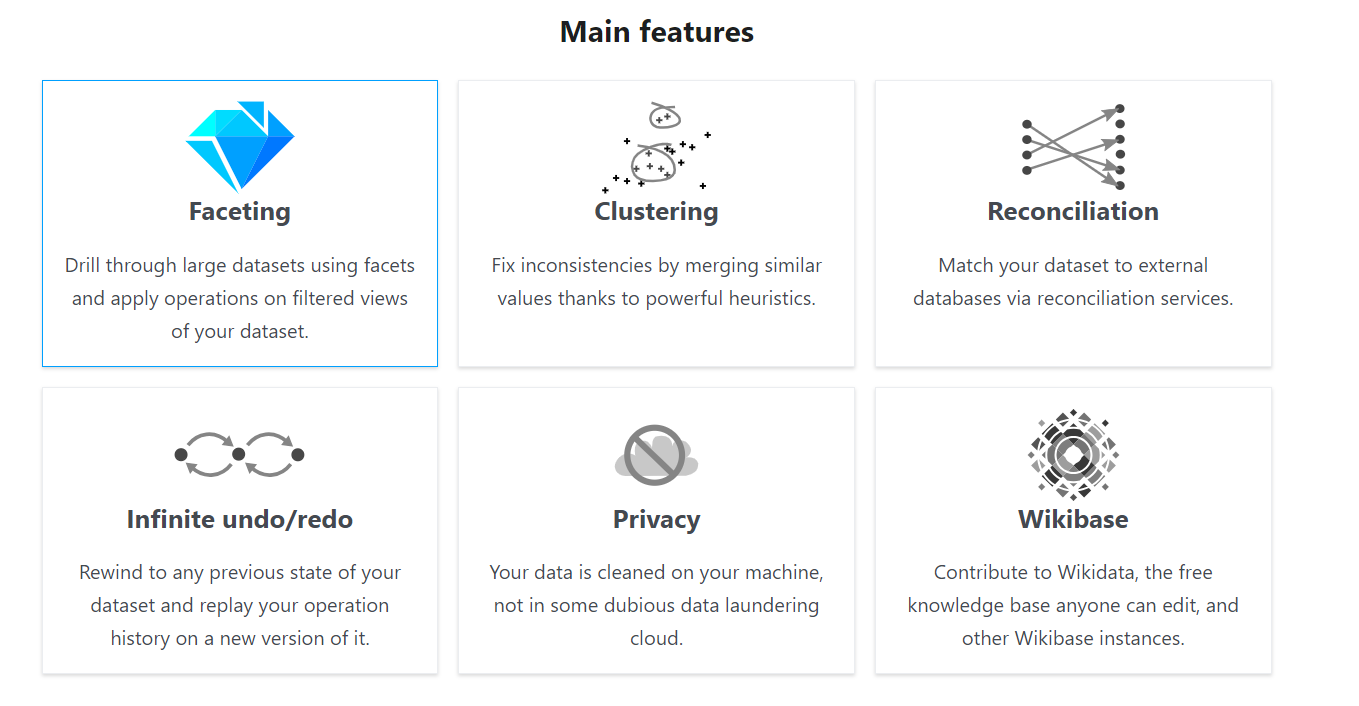

Openrefine

Overview

Teaching: 0 min

Exercises: 90 minQuestions

What is Openrefine? an introduction

Data cleaning with open refine?

Name matching with Openrefine

Objectives

Understand the purpose of Openrefine

Openrefine

Presentation

You can find the complete user manual here

Excercise : Openrefine

- Complete this exercise

SOLUTION

- follow the guidelines in the tutorial document

Key Points

Openrefine saves time

Lunch Break

Overview

Teaching: min

Exercises: minQuestions

Objectives

Key Points

Openrefine part 2

Overview

Teaching: 0 min

Exercises: 90 minQuestions

What is Openrefine? an introduction

Data cleaning with open refine?

Name matching with Openrefine

Objectives

Understand the purpose of Openrefine

Openrefine

Presentation

Excercise : Openrefine

- Finish this exercise

SOLUTION

- follow the guidelines in the document

Excercise : Openrefine Extra exercice

The GLobal names veriefier gives you the opportunity to check your names with numerous sources. 209 Checklists are used for this service.

- On your column ‘scientificName’ Go to reconciliation services -> reconcile –> start reconciling

- Click on ‘add reconciliation service’ and fill in “https://verifier.globalnames.org/api/v1/reconcile”

- Click on Globalnames

- Click on start reconciling

- Click reconcile –> facets –> ‘choose your way of judgement’

SOLUTION

- After reconciliation your names are matched

- More information on this service here

Key Points

Openrefine saves time

SQLite

Overview

Teaching: 0 min

Exercises: 90 minQuestions

Data cleaning with SQLite

Objectives

Understand how SQLite can help cleaning data

Presentation: SQLite

Exercise 1 : Download from GBIF.org

Instructions

- Select at least one of the use cases

- Follow the use case dataset links:

- Click on the occurrences button

- On the left panel, filter by CountryOrArea

- How many occurrences to you see for Croatia?

- ⬇️ Download in simple CSV format

- Open the downloaded file with a text editor

Exercise 2 : Import data

Instructions

- Open the DBrowser application

- Create a new empty database

- Import the GBIF downloaded data into an SQL table named ‘occ’

- How many records do you have?

- Save your database

Exercise 3 : Explore data

Instructions

- (Re)Open your database with DBBrowser

- Do you ALWAYS have scientificName, date and coordinates?

- How complete are the data? (describe)

- Put special attention to individualCount, taxonRank, coordinatesUncertainty, license, issues fields

- Are all records suitable for your study(fitness for use)? Explain why?

- Would you filter out some data? Explain why?

Exercice 4 : Discard data

Instructions

- Do you have absence data? (see occurrenceStatus field)

- Discard absence data

- Create a trusted view to eliminate absence data and data with taxonRank different from SPECIES

- How many records do you have in this trusted view?

Exercice 5 : Filter data

Instructions

- Do you have data without coordinatesUncertaintyInMeters?

- Do you have data with coordinates uncertainty > 10 km?

- Update your trusted view to filter out these records

- Select only these fields in your view:

- scientificName, Date, coordinates, uncertainty and occurrenceID

- How many records do you have now?

Exercice 6 : Annotate data

Instructions

- IndividualCound is not a mandatory field, set it to 1 when null

- Add a withMedia field, set it to True when mediaType is not null

- Add these two fields to your trusted view

- Export the trusted view results in a CSV file

- (Now you are ready to merge this online data with your own data)

Solutions

If needed, see the solutions page.

Key Points

SQL can be very useful to clean your data

Views are great to filter the records and fields you want to keep without changing your original data

Store your SQL statements under Git

SQL statements are easy to understand, sustainable and reusable

Coffee Break

Overview

Teaching: min

Exercises: minQuestions

Objectives

Key Points

SQLite

Overview

Teaching: 0 min

Exercises: 90 minQuestions

Data cleaning with SQLite

Objectives

Understand how SQLite can help cleaning data

Presentation: SQLite

Exercise 1 : Download from GBIF.org

Instructions

- Select at least one of the use cases

- Follow the use case dataset links:

- Click on the occurrences button

- On the left panel, filter by CountryOrArea

- How many occurrences to you see for Croatia?

- ⬇️ Download in simple CSV format

- Open the downloaded file with a text editor

Solution 1

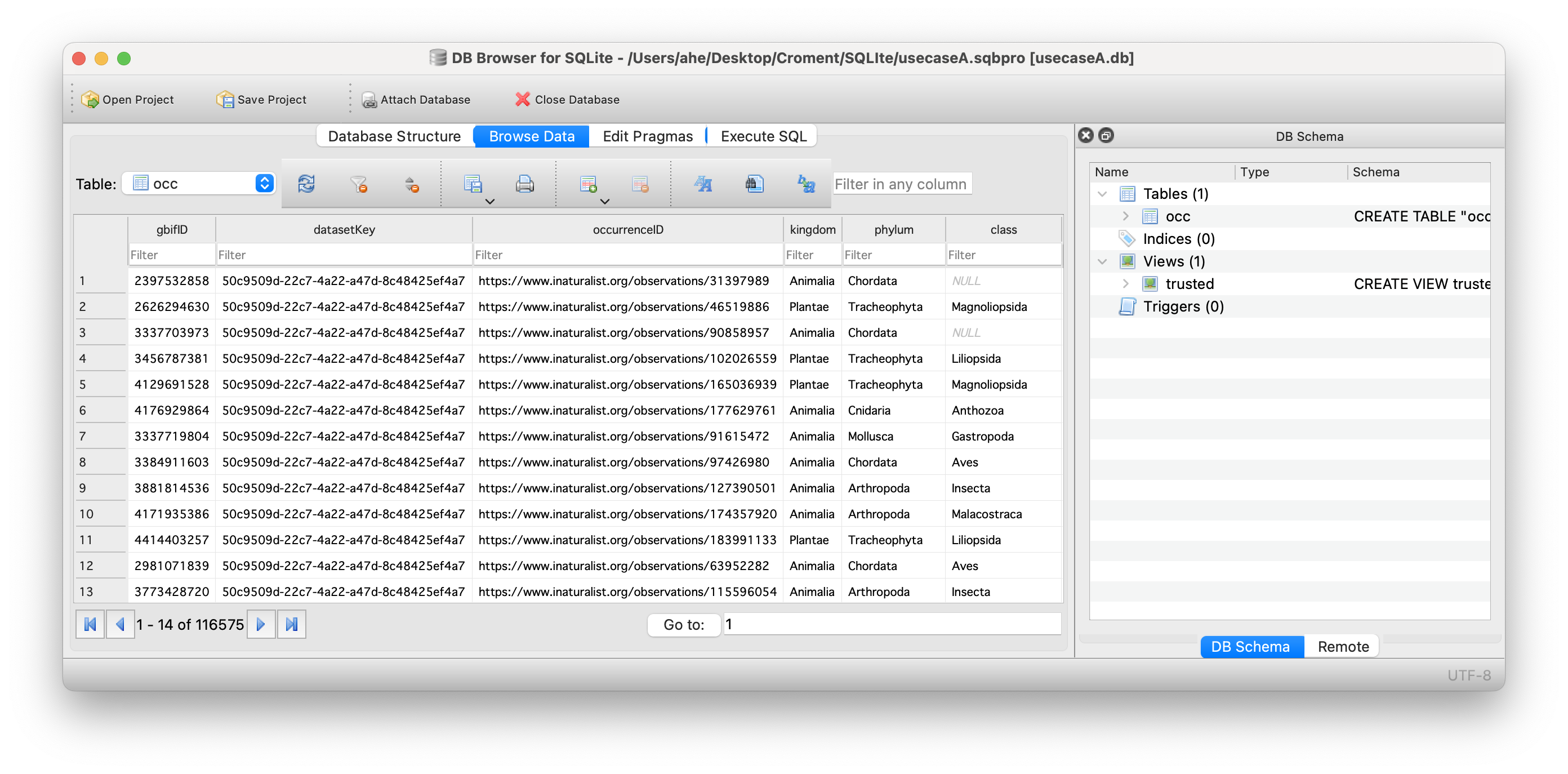

- Your downloads should looks like this:

- A. GBIF Download (116,575 occurrences)

- B. GBIF Download (15,077 occurrences)

- C. GBIF Download (13,668 occurrences)

- D. GBIF Download (9,723 occurrences)

Exercise 2 : Import data

Instructions

- Open the DBrowser application

- Create a new empty database

- Import the GBIF downloaded data into an SQL table named ‘occ’

- How many records do you have?

- Save your database

Solution 2

select count(*) from occ;

Exercise 3 : Explore data

Instructions

- (Re)Open your database with DBBrowser

- Do you ALWAYS have scientificName, date and coordinates?

- How complete are the data? (describe)

- Put special attention to individualCount, taxonRank, coordinatesUncertainty, license, issues fields

- Are all records suitable for your study(fitness for use)? Explain why?

- Would you filter out some data? Explain why?

Solution 3

select * from occ where scientificName is null;

select * from occ where eventdate is null;

select * from occ where year is null or month is null or day is null;

select * from occ where decimalLatitude is null or decimalLongitude is null;

select count(*) from occ where individualCount is null;

select taxonRank, count(*) from occ group by taxonRank;

select phylum, count(*) from occ group by phylum;

select license, count(*) from occ group by license;

Exercice 4 : Discard data

Instructions

- Do you have absence data? (see occurrenceStatus field)

- Discard absence data

- Create a trusted view to eliminate absence data and data with taxonRank different from SPECIES

- How many records do you have in this trusted view?

Solution 4

select count(*) from occ where occurrenceStatus='ABSENT';

create view trusted as select * from occ where occurrenceStatus='PRESENT' and taxonRank='SPECIES';

select count(*) from trusted;

Exercice 5 : Filter data

Instructions

- Do you have data without coordinatesUncertaintyInMeters?

- Do you have data with coordinates uncertainty > 10 km?

- Update your trusted view to filter out these records

- Select only these fields in your view:

- scientificName, Date, coordinates, uncertainty and occurrenceID

- How many records do you have now?

Solution 5

select count(*) from occ where coordinateUncertaintyInMeters is null;

select coordinateUncertaintyInMeters, count(*) from occ group by coordinateUncertaintyInMeters;

select * from occ where CAST(coordinateUncertaintyInMeters as INTEGER) > 10000;

drop view if exists trusted ;

create view trusted as select scientificName, year,month,day,decimalLatitude, decimalLongitude, CAST(coordinateUncertaintyInMeters as INTEGER) as uncertainty, occurrenceID from occ where occurrenceStatus='PRESENT' and taxonRank='SPECIES' and uncertainty <= 10000;

select count(*) from trusted;

select eventdate, strftime('%d',eventdate) as day, strftime('%m',eventdate) as month, strftime('%Y', eventdate) as year from occ;

Exercice 6 : Annotate data

Instructions

- IndividualCound is not a mandatory field, set it to 1 when null

- Add a withMedia field, set it to True when mediaType is not null

- Add these two fields to your trusted view

- Export the trusted view results in a CSV file

- (Now you are ready to merge this online data with your own data)

Solution 6

update occ set individualCount=1 where individualCount is null;

drop view if exists trusted ;

create view trusted as select scientificName, year,month,day,decimalLatitude, decimalLongitude, CAST(coordinateUncertaintyInMeters as INTEGER) as uncertainty, occurrenceID, individualCount, mediaType is not null as withMedia from occ where occurrenceStatus='PRESENT' and taxonRank='SPECIES' and uncertainty <= 10000;

Key Points

SQL can be very useful to clean your data

Views are great to filter the records and fields you want to keep without changing your original data

Store your SQL statements under Git

SQL statements are easy to understand, sustainable and reusable

Discussion on data publication

Overview

Teaching: 30 min

Exercises: 0 minQuestions

Do you ready to publish your biodiversity data in an open way

What are your concerns

What do you want to change

Objectives

Discussion on the principles of Open Science

Discussion on FAIR data.

Discussion on data publication

Become a data publisher for GBIF

The Endorsement procedure

The endorsement procedure aims to ensure that:

- Published data are relevant to GBIF’s scope and objectives

- Arrangements for data hosting are stable and persistent

- Data publishing and use are supported by strong national, regional and thematic engagement

- Data are as open as possible and available for sharing and reuse

[https://www.gbif.org/become-a-publisher]’https://www.gbif.org/become-a-publisher)

Discussion on data publication

- Data Publishing Challenges: What are your personal thresholds in relation to Biodiversity Data Publication? How would you make your data acceptable for publication.

Writing a data policy for an institution for 81+ scientists is a difficult assignement.

- Data Licensing and Usage Policies:: Are you willing to publish unde CC0 - CC-BY or CC-BY-NC?

Not in favour of CC0, discussion on CC-BY and CC-BY-NC , CC-BY might be an option. CC-BY-NC. CC-BY vr CC-NC discussion

- Your Institutes view: What is the position of your institute on open Biodiversity data publication?

Anyone in general is “pro’ open data publication, but questions arise… Are they willing to do this, the answer can be different for eacht person. PPNEA –> dealing with the donors of the data is also an issue. In many cases it is not only the institute or researchers who decide. Different type of data, some will be open availabe, some are sensitive (there are different cases)…. Also need to interact with 3rd parties.

- Impact and Outcomes: Reflect on the impact and outcomes of data publication through GBIF. How can the availability of biodiversity data through GBIF contribute to scientific research, conservation efforts, and policymaking? Data literacy is needed. In Croatia biodiversity data and nature protection area, open data are in the process and leading. Talk to persons or talk also to the institutions. Create obligations….?

Not a lot of discussion needed, it a facht (no opinion). Also think about the sensitive data. (open or aggregated?) Open data awareness raiing is required and also in education.

Discussion challenge

Choose a topic to discuss in your group. 30 minutes for group discussion. 30 minutes for reporting back to the room. 5-6 persons per group.

Solution

Report back to the room on your group discussion

Key Points

Open Science & FAIR data

Introduction to Darwin Core

Overview

Teaching: 30 min

Exercises: 40 minQuestions

What is Darwin Core?

What is a Darwin Core Archive?

Why do people use Darwin Core for their data?

What are the required Darwin Core terms for sharing to GBIF?

Objectives

Understand the purpose of Darwin Core.

Understand how to map data to Darwin Core.

Plan for mapping to Darwin Core.

Presentation

Darwin Core - A global community of data sharing and integration

Darwin Core is a data standard to mobilize and share biodiversity data. Over the years, the Darwin Core standard has expanded to enable exchange and sharing of diverse types of biological observations from citizen scientists, ecological monitoring, eDNA, animal telemetry, taxonomic treatments, and many others. Darwin Core is applicable to any observation of an organism (scientific name, OTU, or other methods of defining a species) at a particular place and time. In Darwin Core this is an occurrence. To learn more about the foundations of Darwin Core read Wieczorek et al. 2012.

Demonstrated Use of Darwin Core

The power of Darwin Core is most evident in the data aggregators that harvest data using that standard. The one we will refer to most frequently in this workshop is Global Biodiversity Information Facility (learn more about GBIF). Another prominent one is the Ocean Biodiversity Information System (learn more about OBIS) . It’s also used by the Atlas of Living Australia, iDigBio, among others.

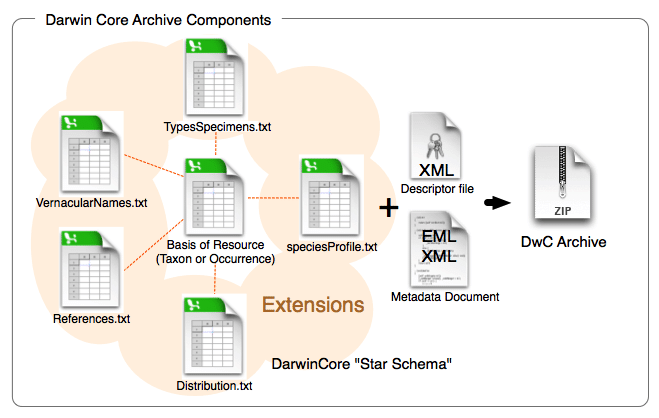

Darwin Core Archives

Darwin Core Archives are what OBIS and GBIF harvest into their systems. Fortunately the software created and maintained by GBIF, the Integrated Publishing Toolkit, produces Darwin Core Archives for us. Darwin Core Archives are pretty simple. It’s a zipped folder containing the data (one or several files depending on how many extensions you use), an Ecological Metadata Language (EML) XML file, and a meta.xml file that describes what’s in the zipped folder.

Exercise

Challenge: Download this Darwin Core Archive and examine what’s in it. Did you find anything unusual or that you don’t understand what it is?

Solution

dwca-tpwd_harc_texasaransasbay_bagseine-v2.3 |-- eml.xml |-- event.txt |-- extendedmeasurementorfact.txt |-- meta.xml |-- occurrence.txt

Darwin Core Mapping

Now that we understand a bit more about why Darwin Core was created and how it is used today we can begin the work of mapping data to the standard. The key resource when mapping data to Darwin Core is the Darwin Core Quick Reference Guide. This document provides an easy-to-read reference of the currently recommended terms for the Darwin Core standard. There are a lot of terms there and you won’t use them all for every dataset (or even use them all on any dataset) but as you apply the standard to more datasets you’ll become more familiar with the terms.

Tip

If your raw column headers are Darwin Core terms verbatim then you can skip this step! Next time you plan data collection use the standard DwC term headers!

Exercise

Challenge: Find the matching Darwin Core term for these column headers.

- SAMPLE_DATE (example data: 09-MAR-21 05.45.00.000000000 PM)

- lat (example data: 32.6560)

- depth_m (example data: 6 meters)

- COMMON_NAME (example data: staghorn coral)

- percent_cover (example data: 15)

- COUNT (example data: 2 Females)

Solution

eventDatedecimalLatitudeminimumDepthInMetersandmaximumDepthInMetersvernacularNameorganismQuantityandorganismQuantityType- This one is tricky- it’s two terms combined and will need to be split.

indvidualCountandsex

Tip

To make the mapping step easier on yourself, we recommend starting a mapping document/spreadsheet (or document it as a comment in your script). List out all of your column headers in one column and document the appropriate Dawin Core term(s) in a second column. For example:

my term DwC term lat decimalLatitude date eventDate species scientificName

What are the required Darwin Core terms for publishing to GBIF?

When doing your mapping some required information may be missing. Below are the Darwin Core terms that are required to share your data to OBIS plus a few that are needed for GBIF.

| Darwin Core Term | Definition | Comment | Example |

|---|---|---|---|

occurrenceID |

An identifier for the Occurrence (as opposed to a particular digital record of the occurrence). In the absence of a persistent global unique identifier, construct one from a combination of identifiers in the record that will most closely make the occurrenceID globally unique. | To construct a globally unique identifier for each occurrence you can usually concatenate station + date + scientific name (or something similar) but you’ll need to check this is unique for each row in your data. It is preferred to use the fields that are least likely to change in the future for this. For ways to check the uniqueness of your occurrenceIDs see the QA / QC section of the workshop. | Station_95_Date_09JAN1997:14:35:00.000_Atractosteus_spatula |

basisOfRecord |

The specific nature of the data record. | Pick from these controlled vocabulary terms: HumanObservation, MachineObservation, MaterialSample, PreservedSpecimen, LivingSpecimen, FossilSpecimen | HumanObservation |

scientificName |

The full scientific name, with authorship and date information if known. When forming part of an Identification, this should be the name in lowest level taxonomic rank that can be determined. This term should not contain identification qualifications, which should instead be supplied in the identificationQualifier term. |

Note that cf., aff., etc. need to be parsed out to the identificationQualifier term. For a more thorough review of identificationQualifier see this paper. |

Atractosteus spatula |

eventDate |

The date-time or interval during which an Event occurred. For occurrences, this is the date-time when the event was recorded. Not suitable for a time in a geological context. | Must follow ISO 8601. See more information on dates in the Data Cleaning section of the workshop. | 2009-02-20T08:40Z |

decimalLatitude |

The geographic latitude (in decimal degrees, using the spatial reference system given in geodeticDatum) of the geographic center of a Location. Positive values are north of the Equator, negative values are south of it. Legal values lie between -90 and 90, inclusive. | For OBIS and GBIF the required geodeticDatum is WGS84. Uncertainty around the geographic center of a Location (e.g. when sampling event was a transect) can be recorded in coordinateUncertaintyInMeters. See more information on coordinates in the Data Cleaning section of the workshop. |

-41.0983423 |

decimalLongitude |

The geographic longitude (in decimal degrees, using the spatial reference system given in geodeticDatum) of the geographic center of a Location. Positive values are east of the Greenwich Meridian, negative values are west of it. Legal values lie between -180 and 180, inclusive | For OBIS and GBIF the required geodeticDatum is WGS84. See more information on coordinates in the Data Cleaning section of the workshop. |

-121.1761111 |

countryCode |

The standard code for the country in which the location occurs. | Use an ISO 3166-1-alpha-2 country code. Not required for OBIS but GBIF prefers to have this for their system. For international waters, leave blank. | US, MX, CA |

kingdom |

The full scientific name of the kingdom in which the taxon is classified. | Not required for OBIS but GBIF needs this to disambiguate scientific names that are the same but in different kingdoms. | Animalia |

geodeticDatum |

The ellipsoid, geodetic datum, or spatial reference system (SRS) upon which the geographic coordinates given in decimalLatitude and decimalLongitude as based. | Must be WGS84 for data shared to OBIS and GBIF but it’s best to state explicitly that it is. | WGS84 |

What other terms should be considered?

While these terms are not required for publishing data to GBIF, they are extremely helpful for downstream users because without them the data are less useful for future analyses. For instance, depth is a crucial piece of information for marine observations, but it is not always included. For the most part the ones listed below are not going to be sitting there in the data, so you’ll have to determine what the values should be and add them in. Really try your hardest to include them if you can.

| Darwin Core Term | Definition | Comment | Example |

|---|---|---|---|

coordinateUncertaintyInMeters |

The horizontal distance (in meters) from the given decimalLatitude and decimalLongitude describing the smallest circle containing the whole of the Location. Leave the value empty if the uncertainty is unknown, cannot be estimated, or is not applicable (because there are no coordinates). Zero is not a valid value for this term | There’s always uncertainty associated with locations. Recording the uncertainty is crucial for downstream analyses. | 15 |

occurrenceStatus |

A statement about the presence or absence of a Taxon at a Location. | For GBIF & OBIS, only valid values are present and absent. |

present |

samplingProtocol |

The names of, references to, or descriptions of the methods or protocols used during an Event. | Bag Seine | |

taxonRank |

The taxonomic rank of the most specific name in the scientificName. | Also helps with disambiguation of scientific names. | Species |

organismQuantity |

A number or enumeration value for the quantity of organisms. | OBIS and GBIF also likes to see this in the Extended Measurement or Fact extension. | 2.6 |

organismQuantityType |

The type of quantification system used for the quantity of organisms. | Relative Abundance | |

datasetName |

The name identifying the data set from which the record was derived. | TPWD HARC Texas Coastal Fisheries Aransas Bag Bay Seine | |

dataGeneralizations |

Actions taken to make the shared data less specific or complete than in its original form. Suggests that alternative data of higher quality may be available on request. | This veers somewhat into the realm of metadata and will not be applicable to all datasets but if the data were modified such as due to sensitive species then it’s important to note that for future users. | Coordinates generalized from original GPS coordinates to the nearest half degree grid cell |

informationWithheld |

Additional information that exists, but that has not been shared in the given record. | Also useful if the data have been modified this way for sensitive species or for other reasons. | location information not given for endangered species |

institutionCode |

The name (or acronym) in use by the institution having custody of the object(s) or information referred to in the record. | TPWD |

Other than these specific terms, work through the data that you have and try to crosswalk it to the Darwin Core terms that match best.

Exercise

Challenge: Create some crosswalk notes for your dataset.

Compare your data files to the table(s) above to devise a plan to crosswalk your data columns into the DwC terms.

Key Points

Darwin Core isn’t difficult to apply, it just takes a little bit of time.

Using Darwin Core allows datasets from across projects, organizations, and countries to be integrated together.

Applying certain general principles to the data will make it easier to map to Darwin Core.

Implementing Darwin Core makes data FAIR-er and means becoming part of a community of people working together to understand species no matter where they work or are based.

Coffee Break

Overview

Teaching: min

Exercises: minQuestions

Objectives

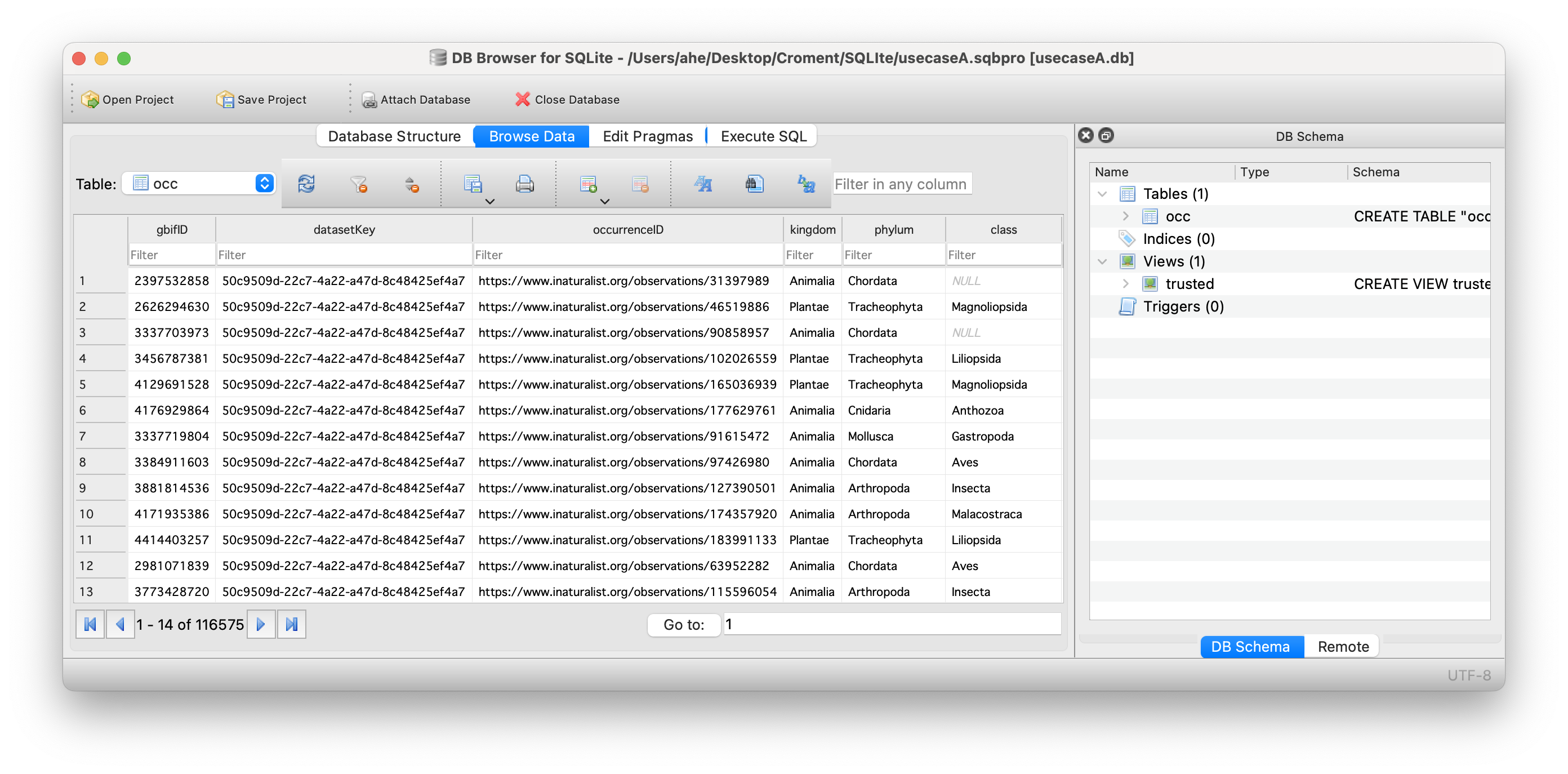

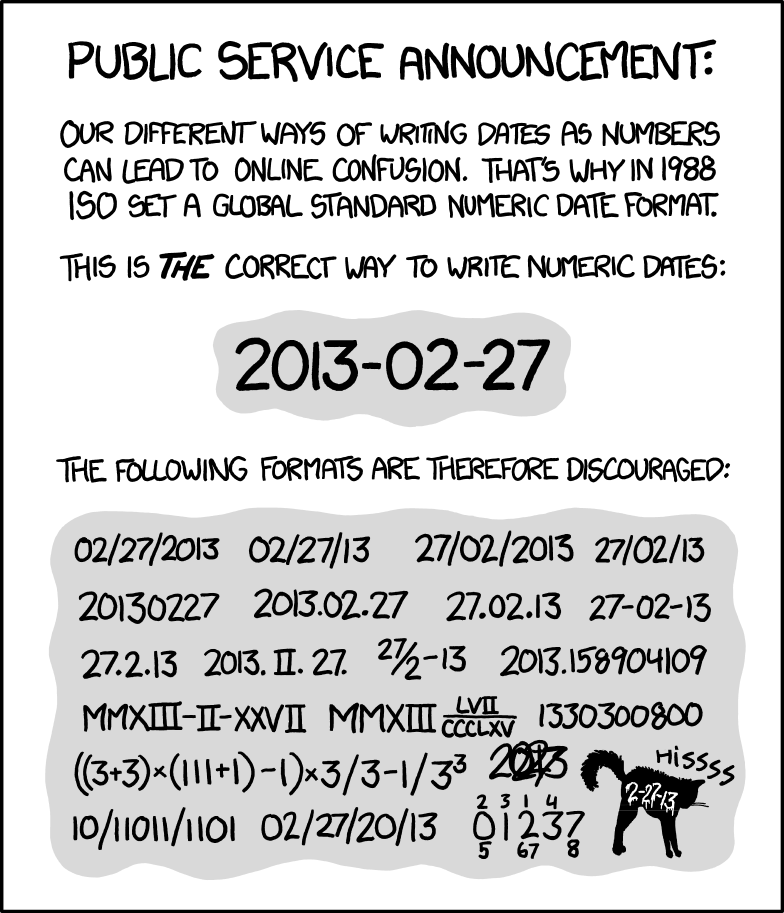

Image credit: xkcd

Image credit: xkcd

Key Points

Data Cleaning for Darwin Core

Overview

Teaching: 0 min

Exercises: 120 minQuestions

How to convert dates to ISO?

How to match scientific names to GBIF?

How to convert latitudes and longitudes to decimal degrees?

Objectives

Aligning dates to the ISO 8601 standard.

Matching scientific names

Converting latitude and longitude variations to decimal degrees North and East.

Some usefull links:

Now that you know what the mapping is between your raw data and the Darwin Core standard, it’s time to start cleaning up the data to align with the conventions described in the standard. The following activities are the three most common conversions a dataset will undergo to align to the Darwin Core standard:

- Ensuring dates follow the ISO 8601 standard

- Matching scientific names to an authoritative resource

- Ensuring latitude and longitude values are in decimal degrees

Python is a high-level, general-purpose programming language. Its design philosophy emphasizes code readability with the use of significant indentation. Make sure that Python is installed on your machine. You can download Python here: https://www.anaconda.com/ R is a language and environment for statistical computing and graphics. The core R language is augmented by a large number of extension packages, containing reusable code, documentation, and sample data. You can download R & Rstudio (visual interface) here: https://posit.co/download/rstudio-desktop/

Below is a short summary of each of those conversions as well as some example conversion scripts. The exercises are intended to give you a sense of the variability we’ve seen in datasets and how we went about converting them. While the examples use the pandas package for Python and the tidyverse collection of packages for R (in particular the lubridate package), those are not the only options for dealing with these conversions but simply the ones we use more frequently in our experiences.

Getting your dates in order

Dates can be surprisingly tricky because people record them in many different ways. For our purposes we must follow

ISO 8601 which means using a four digit year, two digit month, and two digit

day with dashes as separators (i.e. YYYY-MM-DD). You can also record time in ISO 8601 but make sure to include the time

zone which can also get tricky if your data take place across time zones and throughout the year where daylight savings

time may or may not be in effect (and start and end times of daylight savings vary across years). There are packages in

R and Python that can help you with these vagaries. Finally, it is possible to record time intervals in ISO 8601 using a

slash (e.g. 2022-01-02/2022-01-12). Examine the dates in your data to determine what format they are following and what

amendments need to be made to ensure they are following ISO 8601. Below are some examples and solutions in Python and R

for them.

ISO 8601 dates can represent moments in time at different resolutions, as well as time intervals, which use “/” as a separator. Date and time are separated by “T”. Timestamps can have a time zone indicator at the end. If not, then they are assumed to be local time. When a time is UTC, the letter “Z” is added at the end (e.g. 2009-02-20T08:40Z, which is the equivalent of 2009-02-20T08:40+00:00).

Tip

Focus on getting your package of choice to read the dates appropriately. While you can use regular expressions to replace and substitute strings to align with the ISO convention, it will typically save you time if you work in your package of choice to translate the dates.

| Darwin Core Term | Description | Example |

|---|---|---|

| eventDate | The date-time or interval during which an Event occurred. For occurrences, this is the date-time when the event was recorded. Not suitable for a time in a geological context. | 1963-03-08T14:07-0600 (8 Mar 1963 at 2:07pm in the time zone six hours earlier than UTC).2009-02-20T08:40Z (20 February 2009 8:40am UTC).2018-08-29T15:19 (3:19pm local time on 29 August 2018).1809-02-12 (some time during 12 February 1809).1906-06 (some time in June 1906).1971 (some time in the year 1971).2007-03-01T13:00:00Z/2008-05-11T15:30:00Z (some time during the interval between 1 March 2007 1pm UTC and 11 May 2008 3:30pm UTC).1900/1909 (some time during the interval between the beginning of the year 1900 and the end of the year 1909).2007-11-13/15 (some time in the interval between 13 November 2007 and 15 November 2007). |

Examples in Openrefine

When dealing with dates using Openrefine, there are a few base tricks that are useful to wrangle your dates in the correct format.

The examples below show how to use the

Openrefineand format your data to the ISO-8601 standard. Here is an overview of the Openrefine data functions. In openrefine code has to be entered here:

01/31/2021 17:00 GMT

Chooseedit cells, –>common transforms–>toDateChooseadd column based on this columnvalue.toDate('yyyy/mm/dd').toString('yyyy-MM-dd')If you have multiple date formats in one column.

value.toDate('MM/yy','MMM-yy').toString('yyyy-MM')“If parsing a date with text components in a language other than your system language you can specify a language code as the format1 argument. For example, a French language date such as “10 janvier 2023”.

value.toDate('fr','dd MMM yyyy')Another option is to split your date columns in 3 separate columns using the split function. After splitting join the columns in a data format code:

cells["year"].value + "-" +cells["month"].value + "-" + cells["day"].value

Examples in Python

When dealing with dates using pandas in Python it is best to create a Series as your time column with the appropriate datatype. Then, when writing your file(s) using .to_csv() you can specify the format which your date will be written in using the

date_formatparameter.The examples below show how to use the pandas.to_datetime() function to read various date formats. The process can be applied to entire columns (or Series) within a DataFrame.

01/31/2021 17:00 GMTThis date follows a typical date construct of

month/day/year24-hour:minutetime-zone. The pandas.to_datetime()function will correctly interpret these dates without theformatparameter.import pandas as pd df = pd.DataFrame({'date':['01/31/2021 17:00 GMT']}) df['eventDate'] = pd.to_datetime(df['date'], format="%m/%d/%Y %H:%M %Z") dfdate eventDate 01/31/2021 17:00 GMT 2021-01-31 17:00:00+00:00

31/01/2021 12:00 ESTThis date is similar to the first date but switches the

monthanddayand identifies a differenttime-zone. The construct looks likeday/month/year24-hour:minutetime-zoneimport pandas as pd df = pd.DataFrame({'date':['31/01/2021 12:00 EST']}) df['eventDate'] = pd.to_datetime(df['date'], format="%d/%m/%Y %H:%M %Z") dfdate eventDate 31/01/2021 12:00 EST 2021-01-31 12:00:00-05:00

January, 01 2021 5:00 PM GMTimport pandas as pd df = pd.DataFrame({'date':['January, 01 2021 5:00 PM GMT']}) df['eventDate'] = pd.to_datetime(df['date'],format='%B, %d %Y %I:%M %p %Z') dfdate eventDate January, 01 2021 5:00 PM GMT 2021-01-01 17:00:00+00:00

1612112400in seconds since 1970This uses the units of

seconds since 1970which is common when working with data in netCDF.import pandas as pd df = pd.DataFrame({'date':['1612112400']}) df['eventDate'] = pd.to_datetime(df['date'], unit='s', origin='unix') dfdate eventDate 1612112400 2021-01-31 17:00:00

44227.708333333333This is the numerical value for dates in Excel because Excel stores dates as sequential serial numbers so that they can be used in calculations. In some cases, when you export an Excel spreadsheet to CSV, the dates are preserved as a floating point number.

import pandas as pd df = pd.DataFrame({'date':['44227.708333333333']}) df['eventDate'] = pd.to_datetime(df['date'].astype(float), unit='D', origin='1899-12-30') dfdate eventDate 44227.708333333333 2021-01-31 17:00:00.000000256Observations with a start date of

2021-01-30and an end date of2021-01-31.Here we store the date as a duration following the ISO 8601 convention. In some cases, it is easier to use a regular expression or simply paste strings together:

import pandas as pd df = pd.DataFrame({'start_date':['2021-01-30'], 'end_date':['2021-01-31']}) df['eventDate'] = df['start_time']+'/'+df['end_time'] dfstart_time end_time eventDate 2021-01-30 2021-01-31 2021-01-30/2021-01-31

Examples in R

When dealing with dates using R, there are a few base functions that are useful to wrangle your dates in the correct format. An R package that is useful is lubridate, which is part of the

tidyverse. It is recommended to bookmark this lubridate cheatsheet.The examples below show how to use the

lubridatepackage and format your data to the ISO-8601 standard.

01/31/2021 17:00 GMTlibrary(lubridate) date_str <- '01/31/2021 17:00 GMT' lubridate::mdy_hm(date_str,tz="UTC") date <- lubridate::format_ISO8601(date) # Separates date and time with a T. date <- paste0(date, "Z") # Add a Z because time is in UTC.[1] "2021-01-31T17:00:00Z"

31/01/2021 12:00 ESTlibrary(lubridate) date_str <- '31/01/2021 12:00 EST' date <- lubridate::dmy_hm(date_str,tz="EST") lubridate::with_tz(date,tz="UTC") date <- lubridate::format_ISO8601(date) date <- paste0(date, "Z")[1] "2021-01-31T17:00:00Z"

January, 01 2021 5:00 PM GMTlibrary(lubridate) date_str <- 'January, 01 2021 5:00 PM GMT' date <- lubridate::mdy_hm(date_str, format = '%B, %d %Y %H:%M', tz="GMT") lubridate::with_tz(date,tz="UTC") lubridate::format_ISO8601(date) date <- paste0(date, "Z")[1] "2021-01-01T17:00:00Z"

1612112400in seconds since 1970This uses the units of

seconds since 1970which is common when working with data in netCDF.library(lubridate) date_str <- '1612112400' date_str <- as.numeric(date_str) date <- lubridate::as_datetime(date_str, origin = lubridate::origin, tz = "UTC") date <- lubridate::format_ISO8601(date) date <- paste0(date, "Z") print(date)[1] "2021-01-31T17:00:00Z"

44227.708333333333This is the numerical value for dates in Excel because Excel stores dates as sequential serial numbers so that they can be used in calculations. In some cases, when you export an Excel spreadsheet to CSV, the dates are preserved as a floating point number.

library(openxlsx) library(lubridate) date_str <- 44227.708333333333 date <- as.Date(date_str, origin = "1899-12-30") # If you're only interested in the YYYY-MM-DD fulldate <- openxlsx::convertToDateTime(date_str, tz = "UTC") fulldate <- lubridate::format_ISO8601(fulldate) fulldate <- paste0(fulldate, "Z") print(date) print(fulldate)[1] "2021-01-31" [1] "2021-01-31T17:00:00Z"Observations with a start date of

2021-01-30and an end date of2021-01-31. For added complexity, consider adding in a 4-digit deployment and retrieval time.Here we store the date as a duration following the ISO 8601 convention. In some cases, it is easier to use a regular expression or simply paste strings together:

library(lubridate) event_start <- '2021-01-30' event_finish <- '2021-01-31' deployment_time <- 1002 retrieval_time <- 1102 Time is recorded numerically (1037 instead of 10:37), so need to change these columns: deployment_time <- substr(as.POSIXct(sprintf("%04.0f", deployment_time), format = "%H%M"), 12, 16) retrieval_time <- substr(as.POSIXct(sprintf("%04.0f", retrieval_time, format = "%H%M"), 12, 16) # If you're interested in just pasting the event dates together: eventDate <- paste(event_start, event_finish, sep = "/") # If you're interested in including the deployment and retrieval times in the eventDate: eventDateTime_start <- lubridate::format_ISO8601(as.POSIXct(paste(event_start, deployment_time), tz = "UTC")) eventDateTime_start <- paste0(eventDateTime_start, "Z") eventDateTime_finish <- lubridate::format_ISO8601(as.POSIXct(paste(event_finish, retrieval_time), tz = "UTC")) eventDateTime_finish <- paste0(eventdateTime_finish, "Z") eventDateTime <- paste(eventDateTime_start, eventDateTime_finish, sep = "/") print(eventDate) print(eventDateTime)[1] "2021-01-30/2021-01-31" [1] "2021-01-30T10:02:00Z/2021-01-31T11:02:00Z"

Tip

When all else fails, treat the dates as strings and use substitutions/regular expressions to manipulate the strings into ISO 8601.

Matching your scientific names to a taxonomic backbone

Introduction

Working with different partners/institutes/researchers results in a diversity of taxonomic names to define species. This hardens comparison amongst datasets, as in many occasions, aggrgeation is aimed for or filtering on specific species. By translating all species names to a common taxonomic backbone (ensuring unique ID’s for each species name), this can be done.

| Darwin Core Term | Description | Example |

|---|---|---|

| scientificNameID | An identifier for the nomenclatural (not taxonomic) details of a scientific name. | urn:lsid:ipni.org:names:37829-1:1.3 |

| kingdom | The full scientific name of the kingdom in which the taxon is classified. | Animalia, Archaea, Bacteria, Chromista, Fungi, Plantae, Protozoa, Viruses |

| taxonRank | The taxonomic rank of the most specific name in the scientificName. | subspecies, varietas, forma, species, genus |

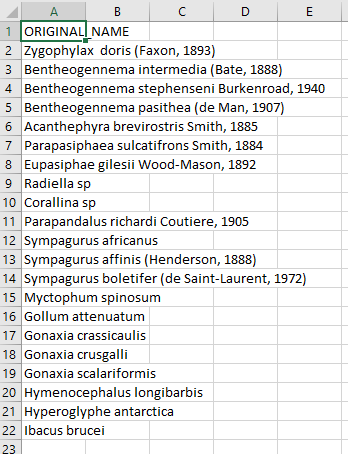

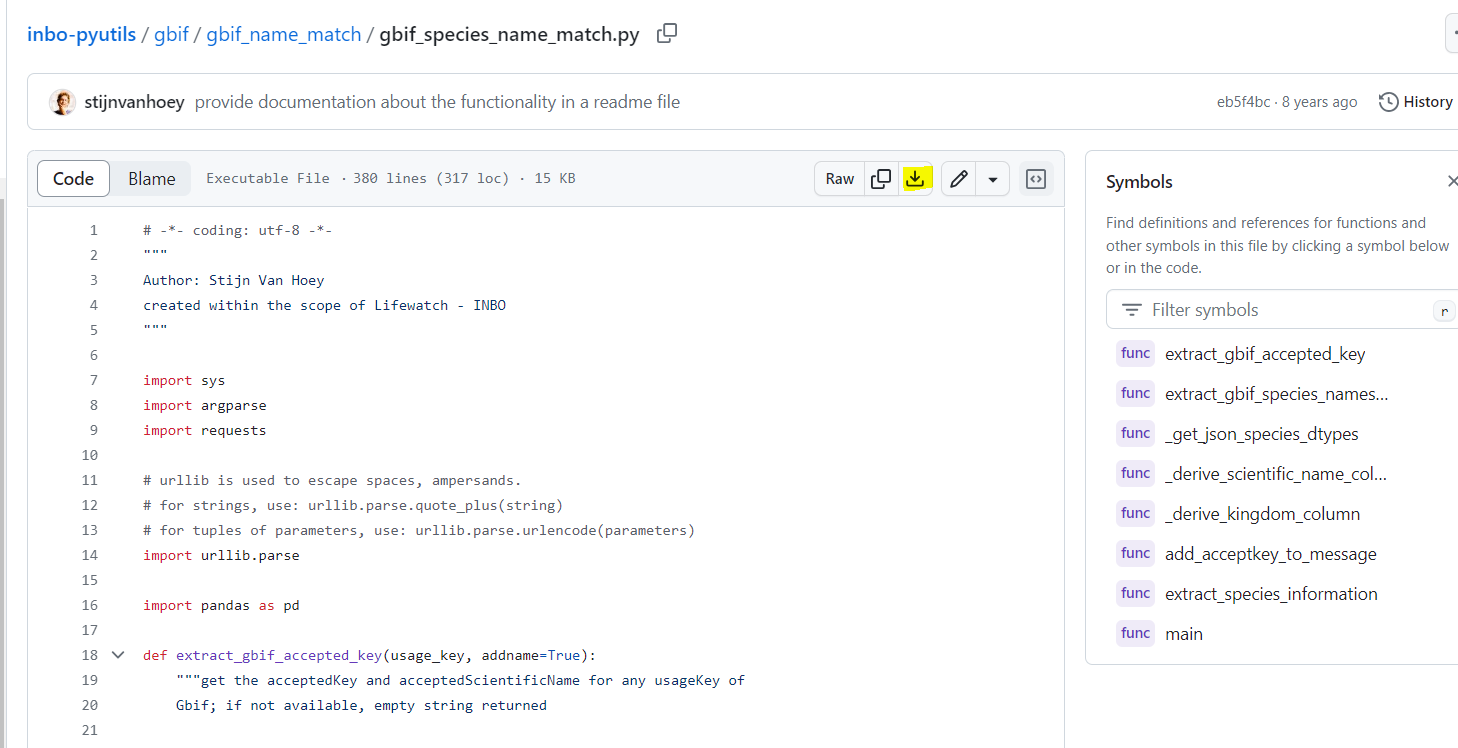

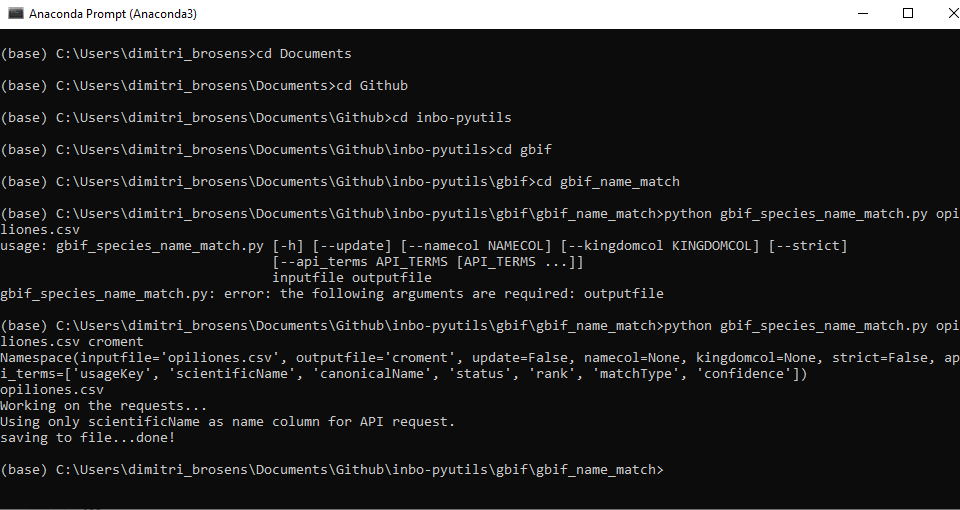

Using the commandline using Python

This small utility provides the functionality to add the species information from the GBIF backbone to any data table (CSV-style or a > Pandas dataframe) by requesting this information via the GBIF API. For each match, the corresponding accepted name is looked for. Nevertheless there will always be errors and control is still essential, the acceptedkeys provide the ability to compare species names from different data sources. The functionality can be loaded within Python itself by importing the function

extract_species_informationor by running the script from the command line. We will show you on how to use the command line

Create a folder which will be used for name matching.

Place your CSV (comma separated value) file with the scientific names of the species of interest in that folder. Here we are showing some of the contents of the file

species.csv.Place this Python file gbif_species_name_match.py in your name matching folder

Navigate in the Python terminal to the correct folder.

Run the command > python gbif_species_name_match.py yourfilename_input.csv yourfilename_output

Using the Global Names Verifier

Verify a list of scientific names against biodiversity data-sources. This service parses incoming names, executes exact or fuzzy matching as required, and returns the best-scored result. Optionally, it can also return matches from data-sources selected by a user.

Create a CSV (comma separated value) file with the scientific name of the species of interest. Here we are showing some of the contents of the file

species.csv.Copy your scientific names to the Global Names Verifier

Click on Search Names. Don’t forget to choose your output format (here choose .csv)

Hopefully, your names will be matched

- In some cases you will have ambiguous matches.

- Capy you response and use it building your Darwin Core file

Getting lat/lon to decimal degrees

Latitude (decimalLatitude) and longitude (decimalLongitude) are the geographic coordinates (in decimal degrees north and east, respectively), using the spatial reference system given in geodeticDatum of the geographic center of a location.

decimalLatitude, positive values are north of the Equator, negative values are south of it. All values lie between -90 and 90, inclusive.decimalLongitude, positive values are east of the Greenwich Meridian, negative values are west of it. All values lie between -180 and 180, inclusive.

Note, that the requirement for decimalLatitude and decimallLongitude is they must be in decimal degrees in WGS84. Since this is the requirement for Darwin Core, OBIS and GBIF will assume data shared using those Darwin Core terms are in the geodetic datum WGS84. We highly recommend checking the coordinate reference system (CRS) of your observations to confirm they are using the same datum and documenting it in the geodeticDatum Darwin Core term. If your coordinates are not using WGS84, they will need to be converted in order to share the data to OBIS and GBIF since decimalLatitude and decimalLongitude are required terms.

Helpful packages for managing CRS and geodetic datum:

Tip

If at all possible, it’s best to extract out the components of the information you have in order to compile the appropriate field. For example, if you have the coordinates as one lone string

17° 51' 57.96" S 149° 39' 13.32" W, try to split it out into its component pieces:17,51,57.96,S,149,39,13.32, andWjust be sure to track which values are latitude and which are longitude.

| Darwin Core Term | Description | Example |

|---|---|---|

| decimalLatitude | The geographic latitude (in decimal degrees, using the spatial reference system given in geodeticDatum) of the geographic center of a Location. Positive values are north of the Equator, negative values are south of it. Legal values lie between -90 and 90, inclusive. | -41.0983423 |

| decimalLongitude | The geographic longitude (in decimal degrees, using the spatial reference system given in geodeticDatum) of the geographic center of a Location. Positive values are east of the Greenwich Meridian, negative values are west of it. Legal values lie between -180 and 180, inclusive. | -121.1761111 |

| geodeticDatum | The ellipsoid, geodetic datum, or spatial reference system (SRS) upon which the geographic coordinates given in decimalLatitude and decimalLongitude as based. | WGS84 |

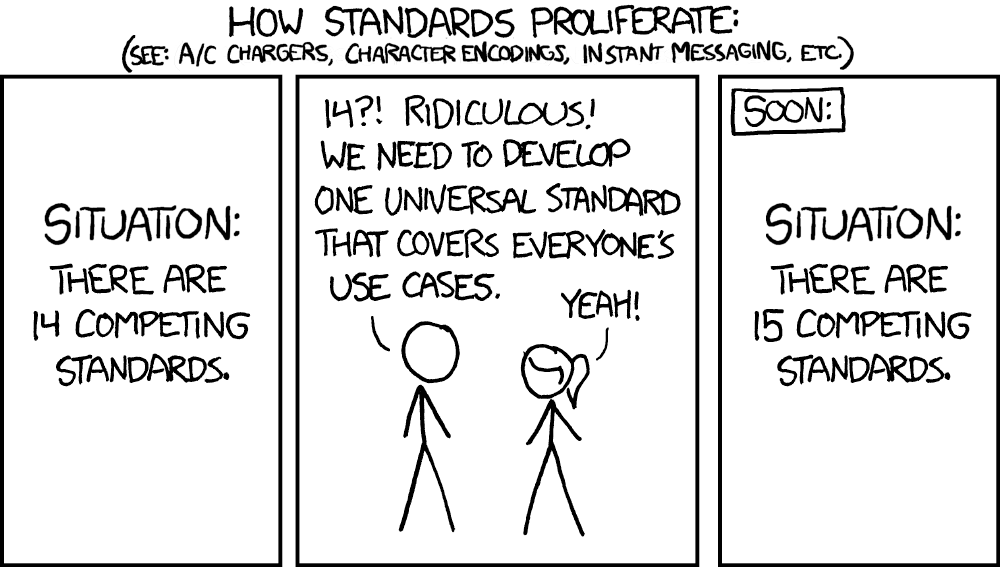

Image credit: xkcd

Image credit: xkcd

Examples in Python

17° 51' 57.96" S149° 39' 13.32" W

- This example assumes you have already split the two strings into discrete components (as shown in the table). An example converting the full strings

17° 51' 57.96" S149° 39' 13.32" Wto decimal degrees can be found here.

lat_degrees lat_minutes lat_seconds lat_hemisphere lon_degrees lon_minutes lon_seconds lon_hemisphere 17 51 57.96 S 149 39 13.32 W df = pd.DataFrame({'lat_degrees':[17], 'lat_minutes':[51], 'lat_seconds':[57.96], 'lat_hemisphere':['S'], 'lon_degrees': [149], 'lon_minutes': [39], 'lon_seconds':[13.32], 'lon_hemisphere': ['W'], }) df['decimalLatitude'] = df['lat_degrees'] + ( (df['lat_minutes'] + (df['lat_seconds']/60) )/60) df['decimalLongitude'] = df['lon_degrees'] + ( (df['lon_minutes'] + (df['lon_seconds']/60) )/60) # Convert hemisphere S and W to negative values as units should be `degrees North` and `degrees East` df.loc[df['lat_hemisphere']=='S','decimalLatitude'] = df.loc[df['lat_hemisphere']=='S','decimalLatitude']*-1 df.loc[df['lon_hemisphere']=='W','decimalLongitude'] = df.loc[df['lon_hemisphere']=='W','decimalLongitude']*-1 df[['decimalLatitude','decimalLongitude']]decimalLatitude decimalLongitude -17.8661 -149.653733° 22.967' N117° 35.321' W

- Similar to above, this example assumes you have already split the two strings into discrete components (as shown in the table).

lat_degrees lat_dec_minutes lat_hemisphere lon_degrees lon_dec_minutes lon_hemisphere 33 22.967 N 117 35.321 W df = pd.DataFrame({'lat_degrees':[33], 'lat_dec_minutes':[22.967], 'lat_hemisphere':['N'], 'lon_degrees': [117], 'lon_dec_minutes': [35.321], 'lon_hemisphere': ['W'], }) df['decimalLatitude'] = df['lat_degrees'] + (df['lat_dec_minutes']/60) df['decimalLongitude'] = df['lon_degrees'] + (df['lon_dec_minutes']/60) # Convert hemisphere S and W to negative values as units should be `degrees North` and `degrees East` df.loc[df['lat_hemisphere']=='S','decimalLatitude'] = df.loc[df['lat_hemisphere']=='S','decimalLatitude']*-1 df.loc[df['lon_hemisphere']=='W','decimalLongitude'] = df.loc[df['lon_hemisphere']=='W','decimalLongitude']*-1 df[['decimalLatitude','decimalLongitude']]decimalLatitude decimalLongitude 0 33.382783 -117.588683

Examples in R

17° 51' 57.96" S149° 39' 13.32" W

lat_degrees lat_minutes lat_seconds lat_hemisphere lon_degrees lon_minutes lon_seconds lon_hemisphere 17 51 57.96 S 149 39 13.32 W library(tibble) tbl <- tibble(lat_degrees = 17, lat_minutes = 51, lat_seconds = 57.96, lat_hemisphere = "S", lon_degrees = 149, lon_minutes = 39, lon_seconds = 13.32, lon_hemisphere = "W") tbl$decimalLatitude <- tbl$lat_degrees + ( (tbl$lat_minutes + (tbl$lat_seconds/60)) / 60 ) tbl$decimalLongitude <- tbl$lon_degrees + ( (tbl$lon_minutes + (tbl$lon_seconds/60)) / 60 ) tbl$decimalLatitude = as.numeric(as.character(tbl$decimalLatitude))*(-1) tbl$decimalLongitude = as.numeric(as.character(tbl$decimalLongitude))*(-1)> tbl$decimalLatitude [1] -17.8661 > tbl$decimalLongitude [1] -149.6537

33° 22.967' N117° 35.321' W

lat_degrees lat_dec_minutes lat_hemisphere lon_degrees lon_dec_minutes lon_hemisphere 33 22.967 N 117 35.321 W library(tibble) tbl <- tibble(lat_degrees = 33, lat_dec_minutes = 22.967, lat_hemisphere = "N", lon_degrees = 117, lon_dec_minutes = 35.321, lon_hemisphere = "W") tbl$decimalLatitude <- tbl$lat_degrees + ( tbl$lat_dec_minutes/60 ) tbl$decimalLongitude <- tbl$lon_degrees + ( tbl$lon_dec_minutes/60 ) tbl$decimalLongitude = as.numeric(as.character(tbl$decimalLongitude))*(-1)> tbl$decimalLatitude [1] 33.38278 > tbl$decimalLongitude [1] -117.5887

33° 22.967' N117° 35.321' W

- Using the measurements package the

conv_unit()can work with space separated strings for coordinates.

lat lat_hemisphere lon lon_hemisphere 33 22.967 N 117 35.321 W tbl <- tibble(lat = "33 22.967", lat_hemisphere = "N", lon = "117 35.321", lon_hemisphere = "W") tbl$decimalLongitude = measurements::conv_unit(tbl$lon, from = 'deg_dec_min', to = 'dec_deg') tbl$decimalLongitude = as.numeric(as.character(tbl$decimalLongitude))*(-1) tbl$decimalLatitude = measurements::conv_unit(tbl$lat, from = 'deg_dec_min', to = 'dec_deg')> tbl$decimalLatitude [1] 33.38278 > tbl$decimalLongitude [1] -117.5887

You can find some more tutorials on data transformation and publication on the INBO tutorial page: https://inbo.github.io/tutorials/

Key Points

When doing conversions it’s best to break out your data into it’s component pieces.

Dates are messy to deal with. Some packages have easy solutions, otherwise use regular expressions to align date strings to ISO 8601.

Latitude and longitudes are like dates, they can be messy to deal with. Take a similar approach.

GBIF community forum & helpdesk

Overview

Teaching: 30 min

Exercises: 0 minQuestions

What is the GBIF community forum

What is the GBIF helpdesk

What is the Technical support hour for GBIF nodes

Objectives

Learn more on how the GBIF community is ready to help

Presentation: The GBIF community forum

Excercise : Browse the Community forum

- Create an account on the community forum

- Check this post and check this out on gbif(www.gbif.org) extra_info

- Browse the forum a bit

SOLUTION

- That was easy

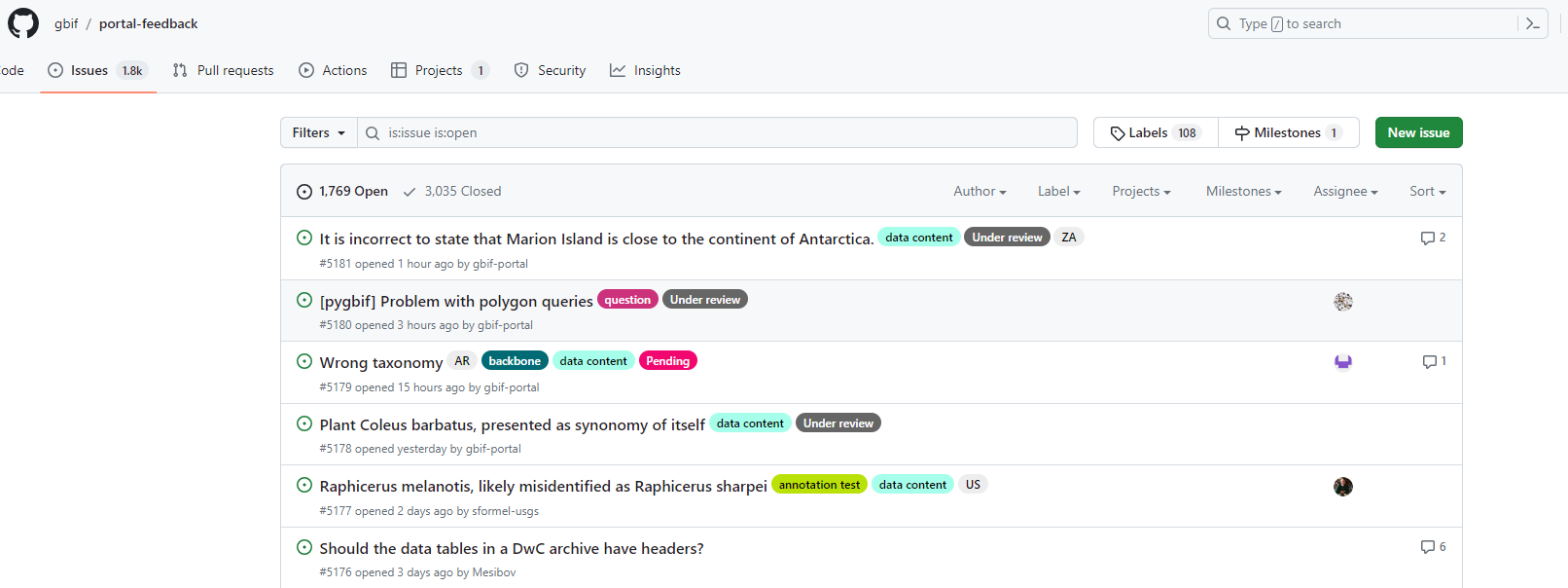

Presentation: The GBIF Helpdesk

GBIF portal ‘Feedback system’

Instructions

- Create an account on Github

- Instead of sending bugs or ideas to helpdesk@gbif.org you can also use Github issues

- On the right upper corner of the GBIF portal clik here:

You can choose different options

content

Bug

Idea

HelpdeskSOLUTION

- That was easy

Key Points

You are not alone in this world

Lunch Break

Overview

Teaching: min

Exercises: minQuestions

Objectives

Key Points

Darwin Core+Extensions archive

Overview

Teaching: 20 min

Exercises: 60 minQuestions

What is a core and what are the extensions in Darwin Core?

How to organize my data and metadata?

How to create Darwin Core Archive

Objectives

Creating IDs and using them

Creating core and extensions files.

Darwin Core Extensions

Now that we have a firm basis for understanding the different terms in Darwin Core the next part to understand is how data tables are organized and the difference between cores and extensions. You will always have a core table (Occurrence core or Event core) with either no extensions or several. What you choose depends on the data you have and how to represent it best. The original Darwin Core core is the Occurrence core. Once people started using that core they began to see that they needed extensions to that core to best represent the data they were trying to share and therefore several extensions have been developed (and are continuing to be developed). As more monitoring data has been shared over time, another core type called Event core was added. Without getting too far into the weeds on the cores and extensions, what’s most important to understand is that you need to pick your core type and once you do that then you pick the extensions to go with it. For example, if your data took place as part of an event (cruise, transects, etc) you will pick Event core. If there was no sampling event, then you will pick Occurrence core.

Different options for sharing the data

Occurrence Core only

The bare minimum for sharing data to OBIS is to use the Occurrence Core with no extensions. This core type covers datasets that only include observations and/or specimen records where no information on sampling is available. Occurrence core is also used for eDNA or DNA derived data.

The Occurrence core allows you to provide all the required Darwin Core terms detailed in the intro section. You can produce a fully compliant Darwin Core version of your data using only the Occurrence core (see this example by Tylar Murray). On the one hand, if the data were collected using some kind of sampling methodology, you will lose much of that information if you use this most simple form of the data. One the other, it is faster and easier to produce.

Thought Experiment

Look at the minimum required fields example. What is possible to do in future reuse? What would not be possible? For instance, note that there is no information about depth or the uncertainty of the coordinates. For more examples check out the Datasets folder in the IOOS Bio Data Guide.

Occurrence Core + extensions

Using the Occurrence core plus relevant extensions means that you can capture more of the data that’s been recorded. As an example, let’s consider an environmental DNA dataset. eDNA datasets have information that is unique to that method and will not be represented well using Occurrence core only. To document eDNA using Darwin Core you should follow this guide; you will need the Occurrence core plus the DNA derived data extension. Adding the DNA derived data extension allows you to capture information such as the PCR primer used, DNA sequences, standard operating procedure used in the assembly and other information specific to this type of data.

Let’s consider another example: a museum dataset that has biological measurements for each individual specimen (e.g. length). All information about each organism’s occurrence (taxonomic information, locality, identification, etc.) will go into the Occurrence core. You can then capture the biotic measurement information (type of measurement, units, accuracy, etc.) by using either the Measurement or Facts extension, or the Extended Measurement or Fact extension (we elaborate on this extension below). Note again here we do not have information on how the organisms were sampled.

Checklist Core + extensions

Suitable for publication of Taxonomic data, still in use but ColDP standard is about to replace that.

Event Core + extensions

As we have indicated earlier, the Event core is for datasets that include known sampling events - details are known about how, when, and where samples were taken.

An innovation that OBIS made in this space was introducing the Extended Measurement or Fact extension (also sometimes referred to as OBIS-ENV-DATA, or eMoF). This uses the Event core with an Occurrence extension + the extended Measurement or Fact extension. The eMoF extension makes it possible to include measurements for both the events (salinity, temperature, dissolved oxygen, gear type, etc.) as well as measurements about the occurrences (weight, length, etc.). Prior to this you were only able to include measurements of the occurrence (in the Measurement or Facts extension).

When these types of measurement data were collected, they may have each had their own column in your dataset. However, the Extended Measurement of Fact extension does not format data in this way. Rather than documenting each of your measurements in separate columns, measurements will be condensed into one column: measurementValue (e.g. 15). Then, to tell us what that value is, there is the column measurementType which describes what the measurement actually is (e.g. length). Finally the column measurementUnit is used to indicate the unit of the measurement (e.g. cm).

Now, you may wonder - what do you write in the “measurementType” field? For some measurements, it may be simple. For others, maybe not as simple. The good news is this field is unconstrained - you can populate it with free text as you like. But what if you were interested in getting all records that have “length” measurements from OBIS? Due to the inevitable heterogeneity in how different people would document “length”, you would have to try to account for all these different ways!

The key thing about the extended Measurement or Fact extension that gets around this challenge, is that it provides a way to include Unique Resource Identifiers (URIs). These URIs are used to populate the measurementTypeID field (as well as measurementUnitID and measurementValueID). URIs mean that if you call the measurementType “abundance” but I call it “Abundance per square meter” and we both use the measurementTypeID “http://vocab.nerc.ac.uk/collection/P01/current/SDBIOL02/” then we know this is the same measurement type even if we didn’t use the same free text words to describe it. Choosing the right URI can be difficult but you can read about finding codes here. All you need to know for now is that you should try to find a measurementTypeID URI that belongs to the P01 collection. OBIS is developing guidelines to help you with the process of choosing URIs, so stay tuned to their manual for updates.

Tip

Consider to check the Datasets classes pages and the Data Quality requirements for each of them.

What’s in an ID?

| Darwin Core Term | Description | Example |

|---|---|---|

| eventID | An identifier for the set of information associated with an Event (something that occurs at a place and time). May be a global unique identifier or an identifier specific to the data set. | INBO:VIS:Ev:00009375Station_95_Date_09JAN1997:14:35:00.000 FFS-216:2007-09-21:A:replicateID1024 |

| occurrenceID | An identifier for the Occurrence (as opposed to a particular digital record of the occurrence). In the absence of a persistent global unique identifier, construct one from a combination of identifiers in the record that will most closely make the occurrenceID globally unique. | urn:catalog:UWBM:Bird:89776 Station_95_Date_09JAN1997:14:35:00.000_Atractosteus_spatula FFS-216:2007-09-21:A:replicateID1024:objectID1345330 |

| measurementID | An identifier for the MeasurementOrFact (information pertaining to measurements, facts, characteristics, or assertions). May be a global unique identifier or an identifier specific to the data set. | 9c752d22-b09a-11e8-96f8-529269fb1459 |

IDs are the keys in your data that are used to link tables together. For example, an occurenceID in the Extended Measurement or Fact table records information about an organism with the same occurrenceID within the Occurrence core table. IDs are also the keys that keep track of each of the records, so that if you notice a mistake or missing information you can keep the record in place in the global aggregators and fix the mistake or add the missing information. For instance, let’s say you have a record with an occurrenceID Station_95_Date_09JAN1997:14:35:00.000_Atractosteus_spatula and after it’s published to OBIS you notice that the latitude was recorded incorrectly. When you fix that record in the data you would keep the occurrenceID Station_95_Date_09JAN1997:14:35:00.000_Atractosteus_spatula, fix the latitude, and republish the data so that the record is still present in OBIS but you have fixed the mistake.

With that in mind what is the best way to create an eventID, occurrenceID, or measurementID? Until we have a system that mints Persistent Identififers for individual records then the best way we have seen is to build the ID from information in the data itself. That way if you need to update or fix a record you simply use the same information again to build the same ID for the same record. Take our example above Station_95_Date_09JAN1997:14:35:00.000_Atractosteus_spatula. This is a concatenation of information from the original source data of the Station number + Verbatim Date + Scientific name. Because this is unique for each row in the occurrence file and we have kept the original data in its original format we can always rebuild this ID by concatenating this same information together again.

It is very important that these IDs do not change over time. So if an ID for a museum specimen is built from e.g. the institution the specimen is being held at, but then the specimen changes institutions - its ID should not change to reflect the move. If the ID changes then the record will be duplicated in the global database and record information could be lost over time.

Exercise Time!

Now, let’s try a practical use case where a birds watchers group send you their data. see explanations

1. Initial checks

- yes

- yes

- not everything cristal clear

- Metadata is not complete

- Event Core

- Occurrence and MeasurementOrFact

- Yes

- Maybe geodeticDatum, coordinatesUncertainty…

- Yes into event, occurrence and measurement

- Occurrence identifiers are missing

2. Data cleaning

- ScientificNames shall appear on each row

- Colors, if meaningful, shall be added as column

- Missing data should be null

- OccurrenceIDs are missing, we suggest to use spreadsheet rowID

- Incorrect eventIDs shall be removed or corrected

3. DarwinCore mapping

- Original data shall be organized in event, occurrence and measurements

- Metadata should be more elaborated : Taxonomic, geographic, time scope…

- License/waiver should be selected

- Fields name should correspond to DarwinCore terms

Key Points

Darwin Core star schema with core and extensions to model the multitude of biological observation data.

Identifiers fields are important keys in your data and we recommend building them from the information in your data.

Minimum Data fields Requirements for each class.

Linking Core entities with the extensions.

Break

Overview

Teaching: min

Exercises: minQuestions

Objectives

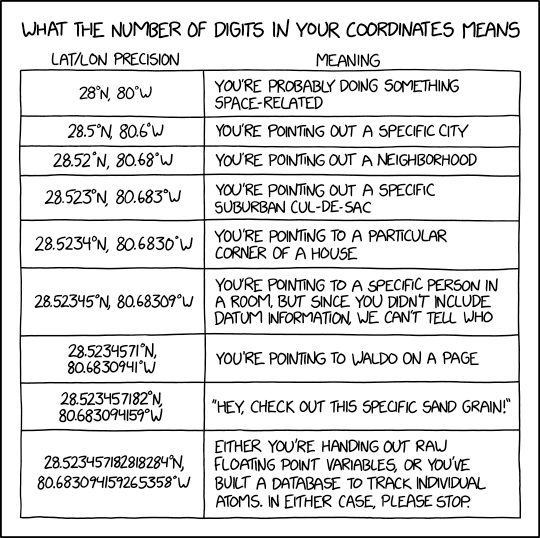

Image credit: xkcd

Image credit: xkcd

Key Points

Metadata

Overview

Teaching: 0 min

Exercises: 30 minQuestions

How are my data published?

What metadata are required for publishing?

Objectives

Showing data publishing pipeline

Introducing the IPT

Introduction to EML

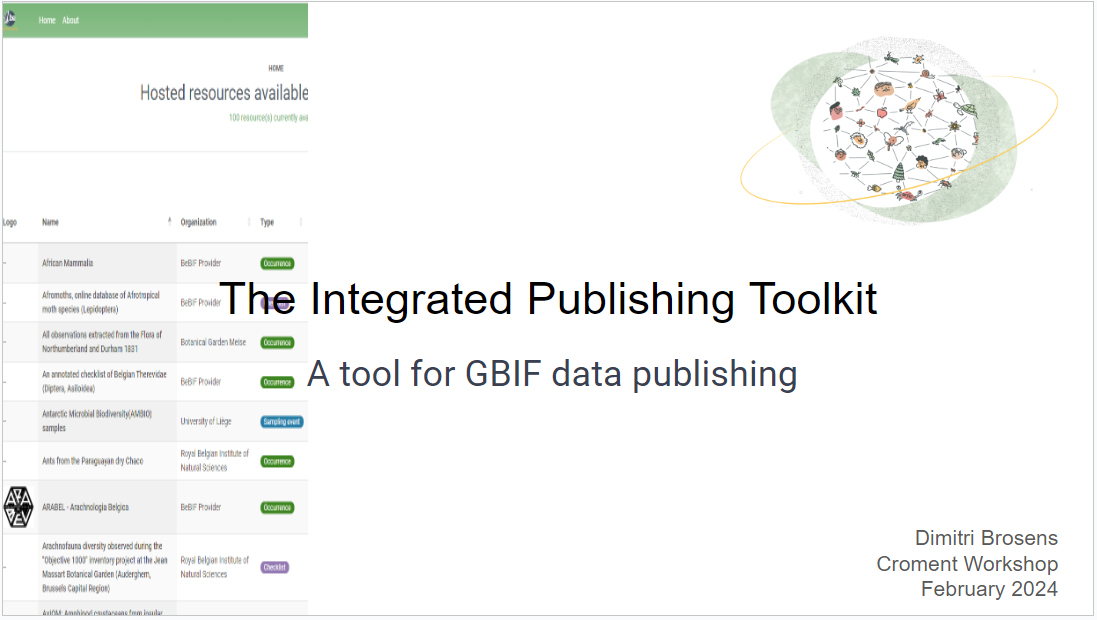

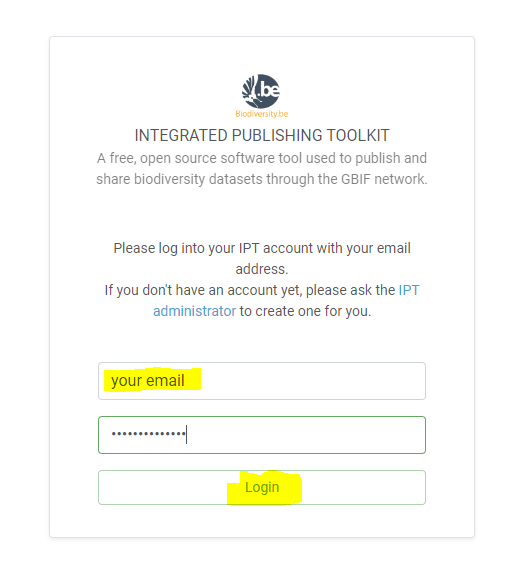

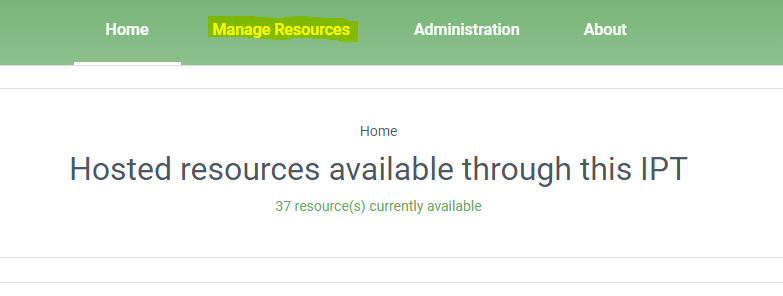

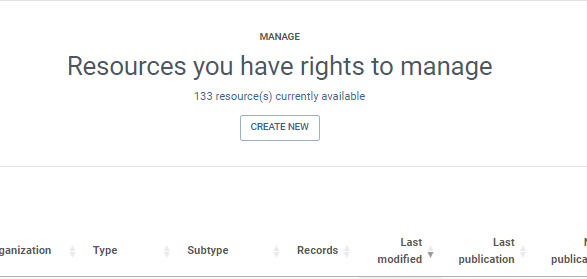

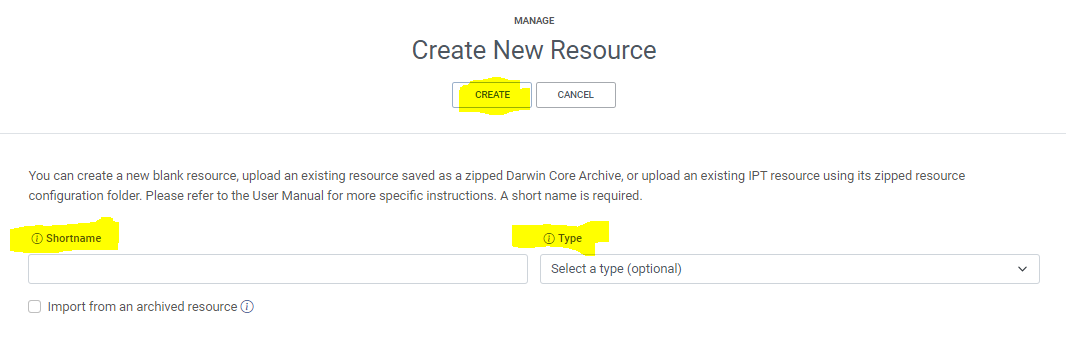

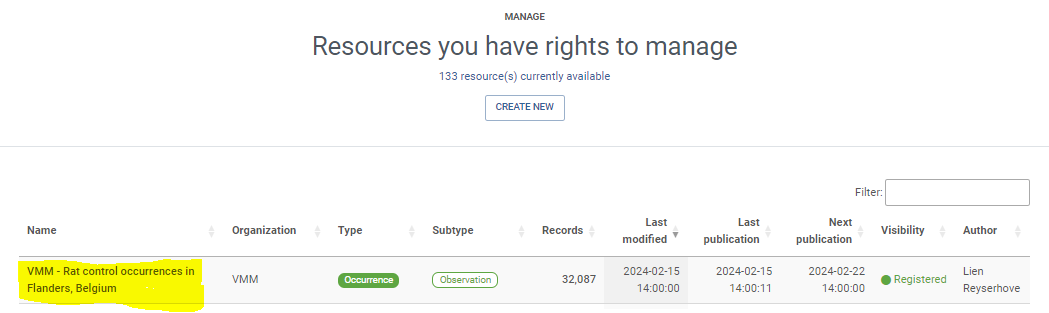

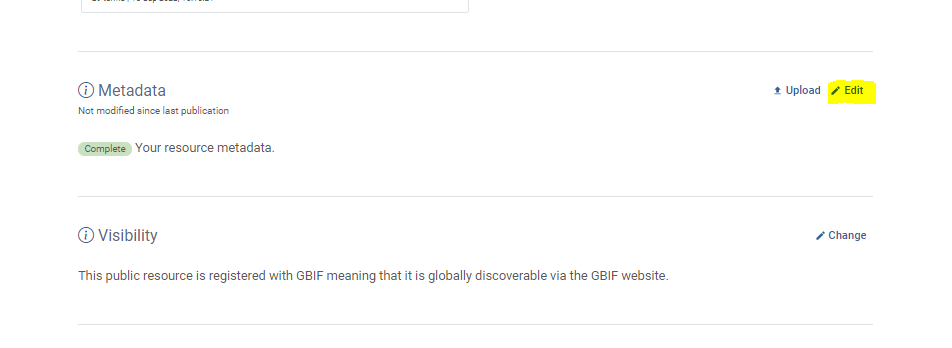

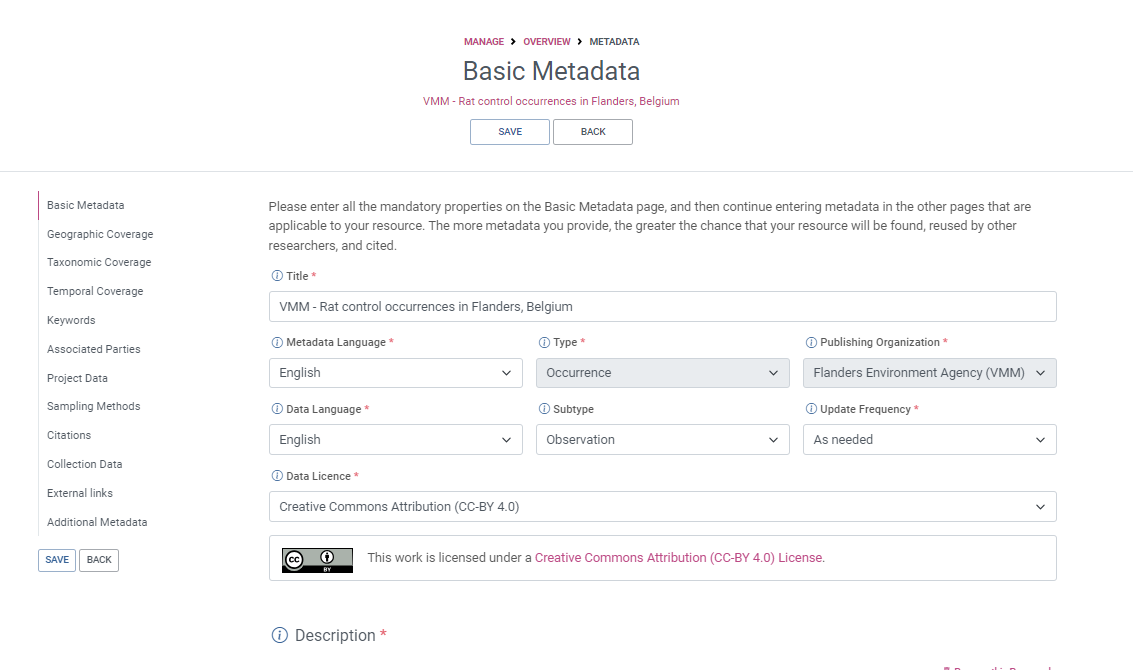

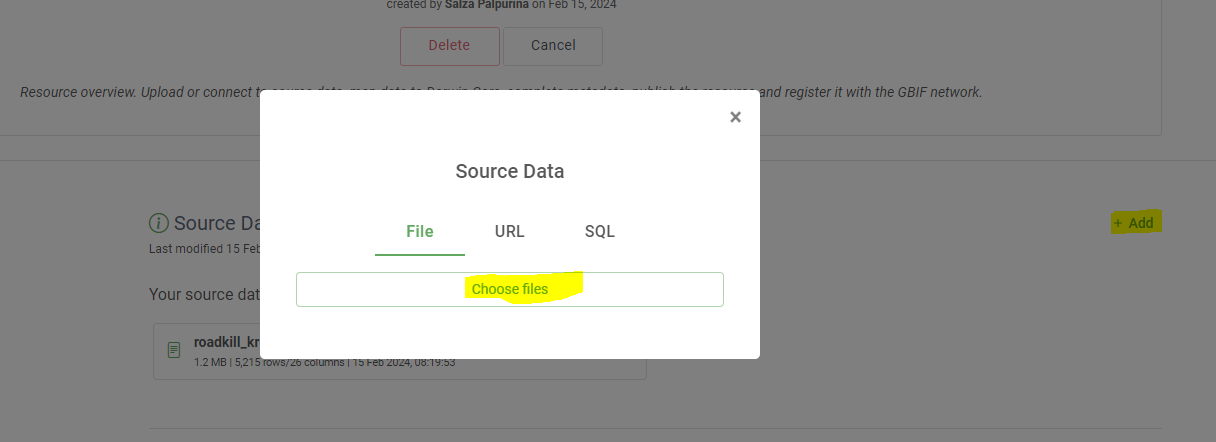

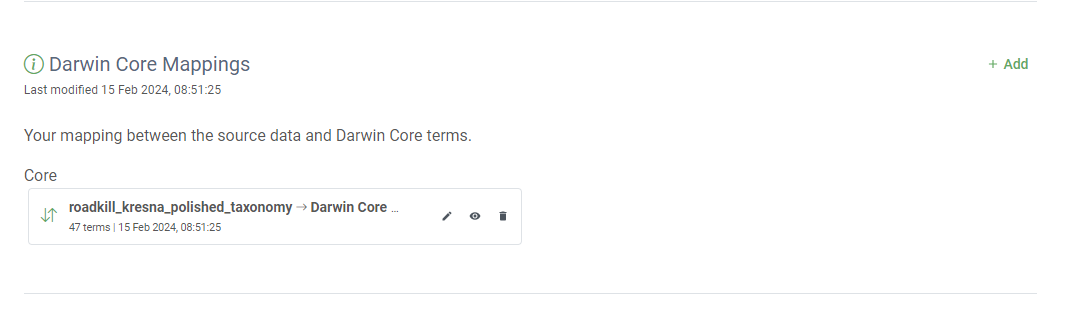

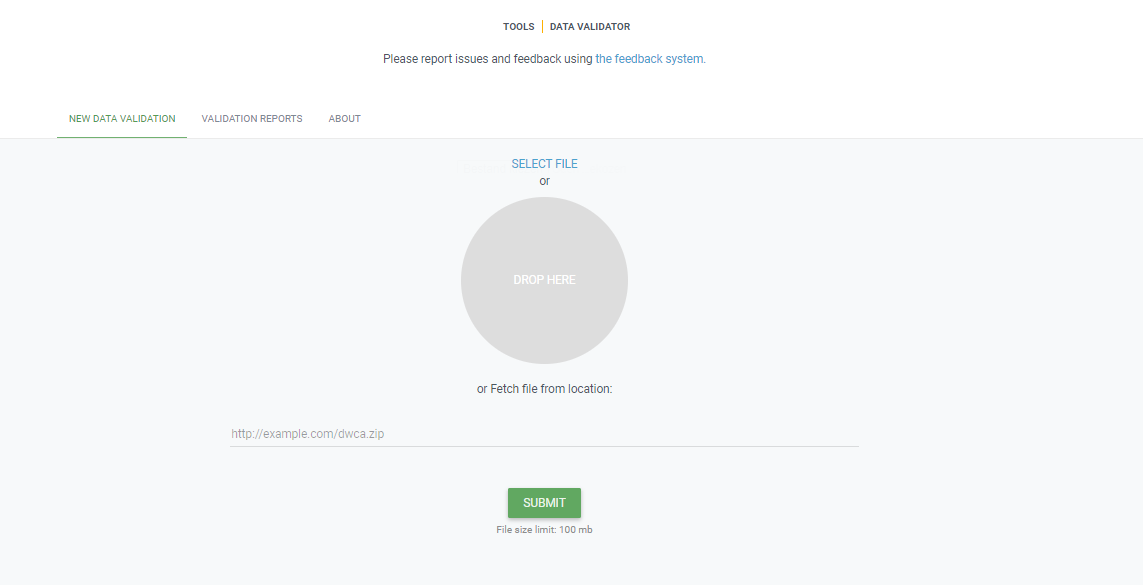

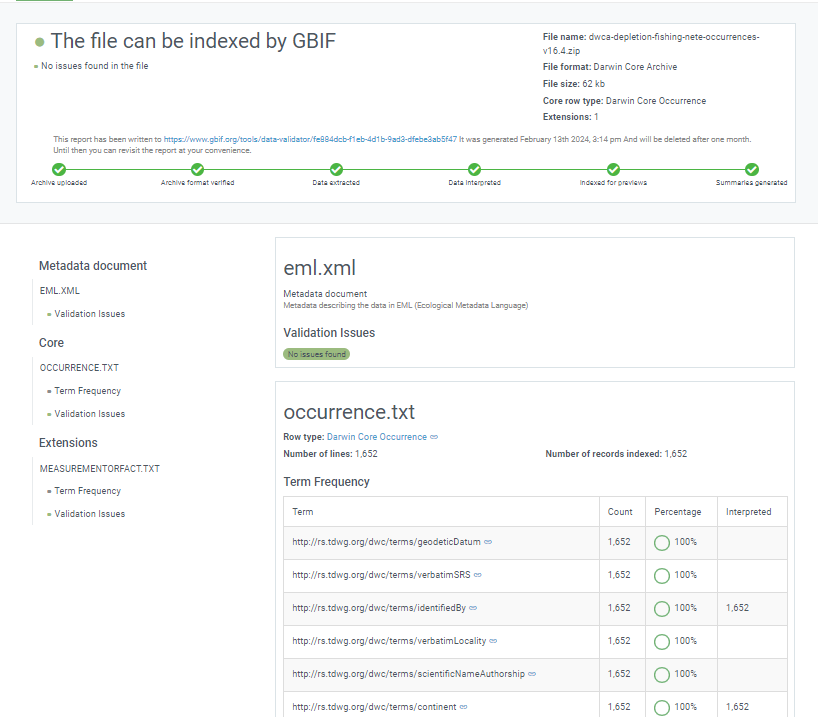

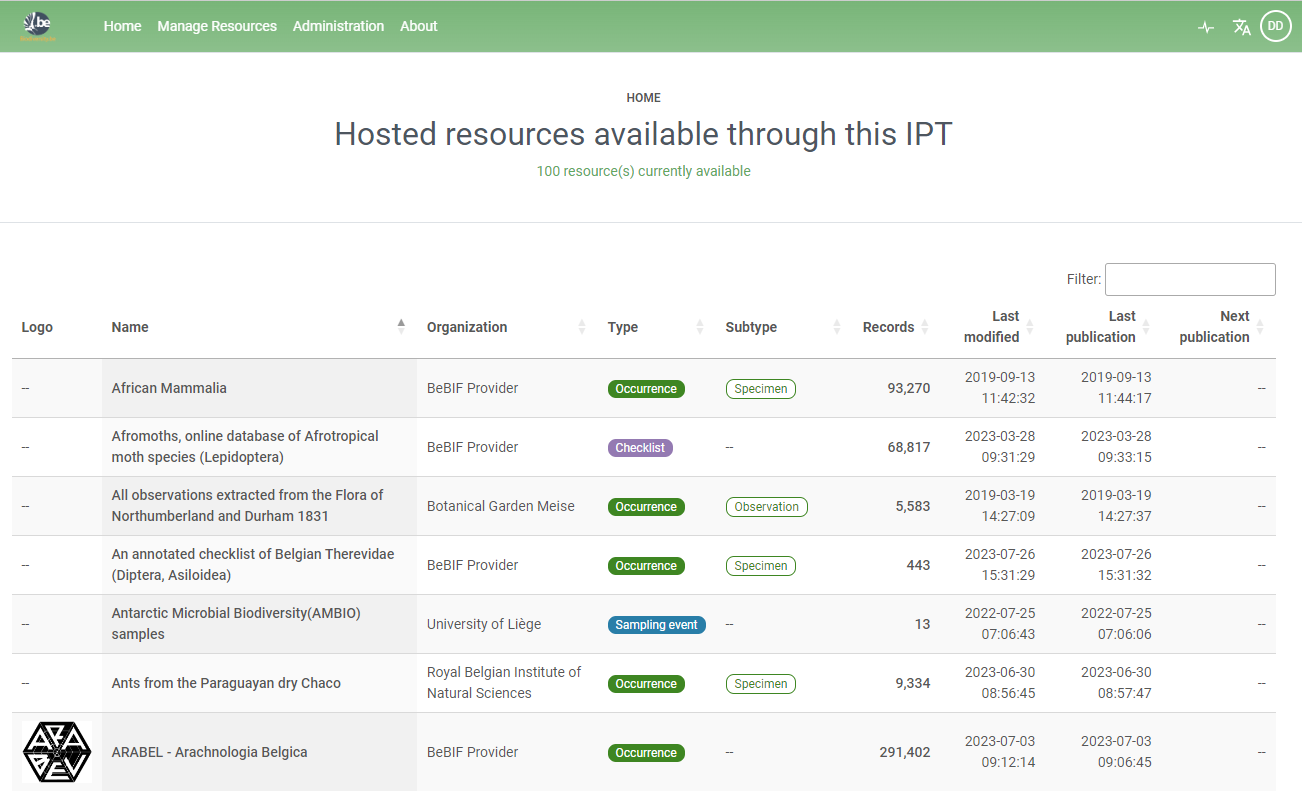

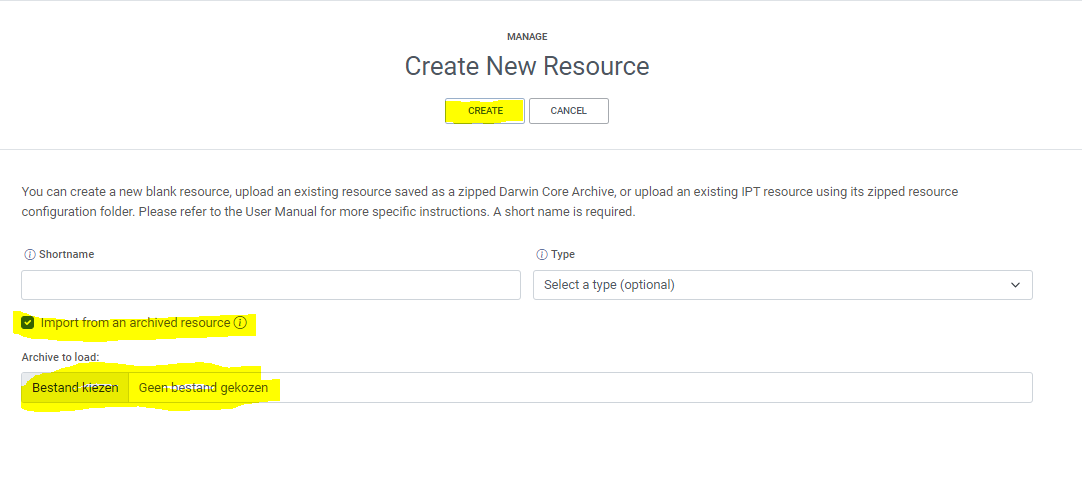

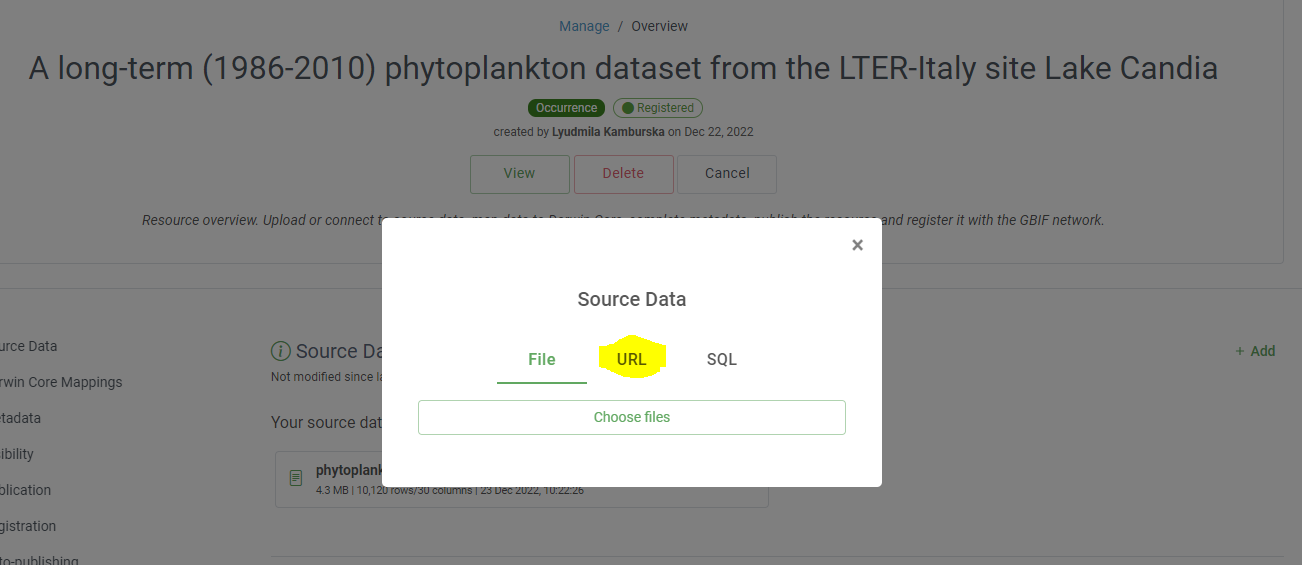

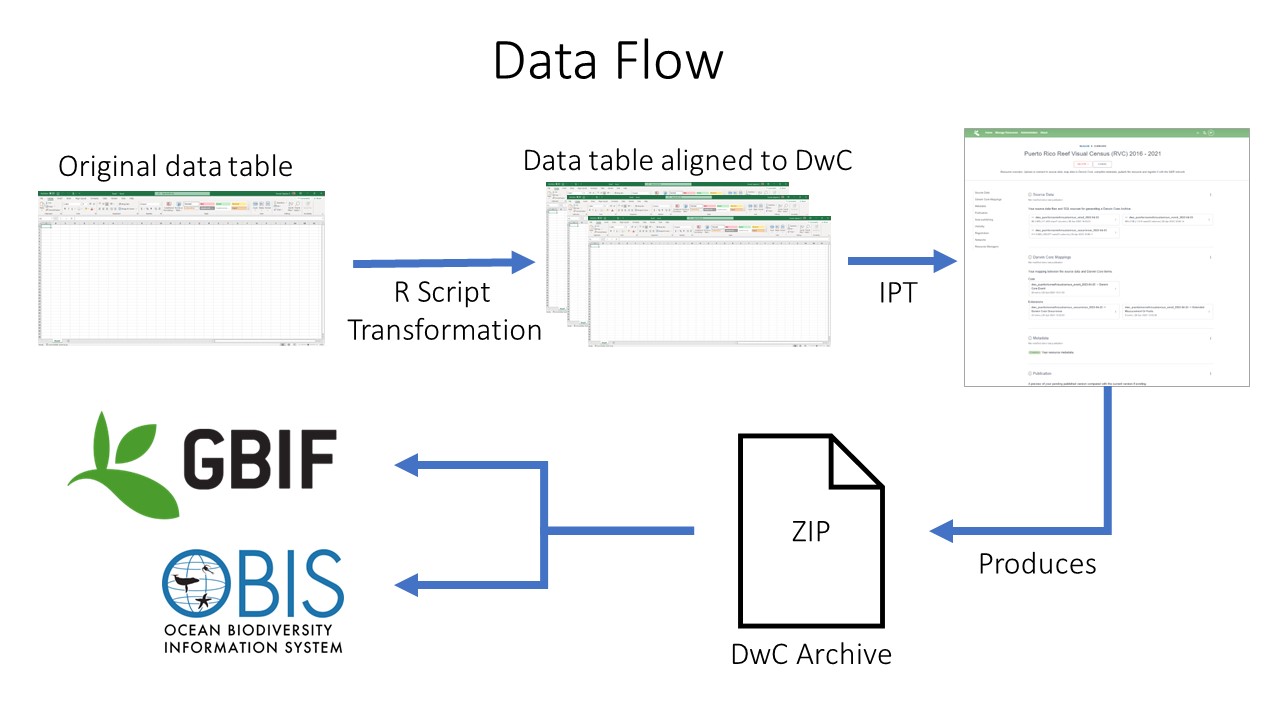

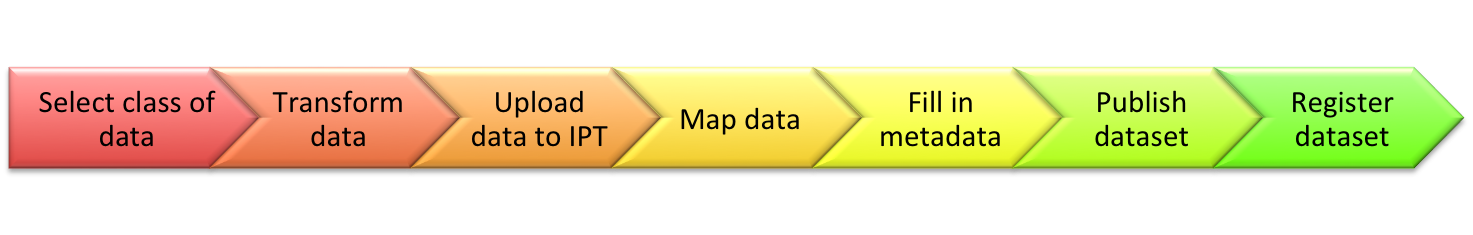

Integrated Publishing Toolkit

The Integrated Publishing Toolkit (IPT) is an open-source web application developed and maintained by the Global Biodiversity Information Facility (GBIF) for publishing biodiversity data. The IPT makes it easy to share four types of biodiversity-related information:

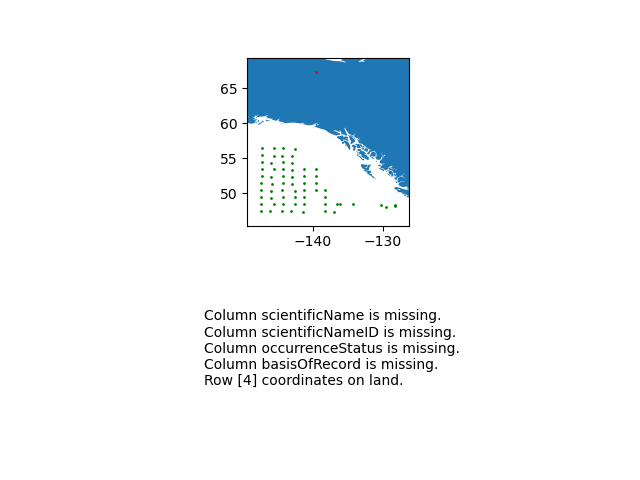

- primary taxon occurrence data

- sampling event data

- general metadata about data sources

- taxon checklists